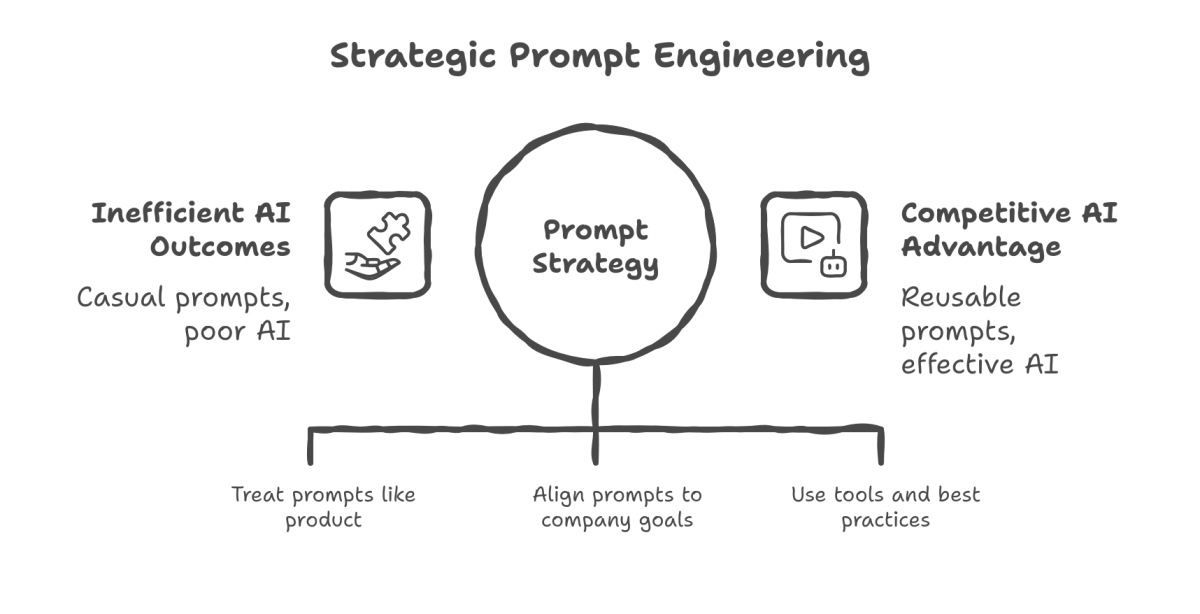

“Most companies treat prompts like throwaway inputs — but the smart ones are building full-scale prompt systems that drive real business outcomes.” – Mike Moisio, Founder of Lingra.ai

Prompt engineering isn’t just about tinkering with clever inputs to ChatGPT. It’s fast becoming a strategic business capability. Every interaction with a large language model (LLM) begins with a prompt – essentially instructions that guide the AI’s output.

If you’re a business leader infusing AI into products or operations, it’s no longer enough to treat prompts as casual inputs. The way your organization designs and manages prompts is now directly tied to efficiency, quality, and trust in AI outcomes.

In other words, prompts are now a business layer of your software. This article explores how to build a prompt strategy that translates your business objectives into effective AI behavior.

We’ll cover why prompts should be handled like a product (with design, testing, and governance), how to architect prompt systems aligned to company goals, and tools and practices for prompt engineering at scale.

By the end, you’ll see prompts not as one-off tricks, but as reusable assets and processes that give your organization a competitive edge in the AI era.

“A well-crafted prompt is like a recipe for your AI chef – get the ingredients and instructions right, and you consistently serve up the desired outcome. Treating prompts as strategic assets ensures your AI systems behave reliably and in line with your business taste.”

Prompts as the New Business Interface Layer

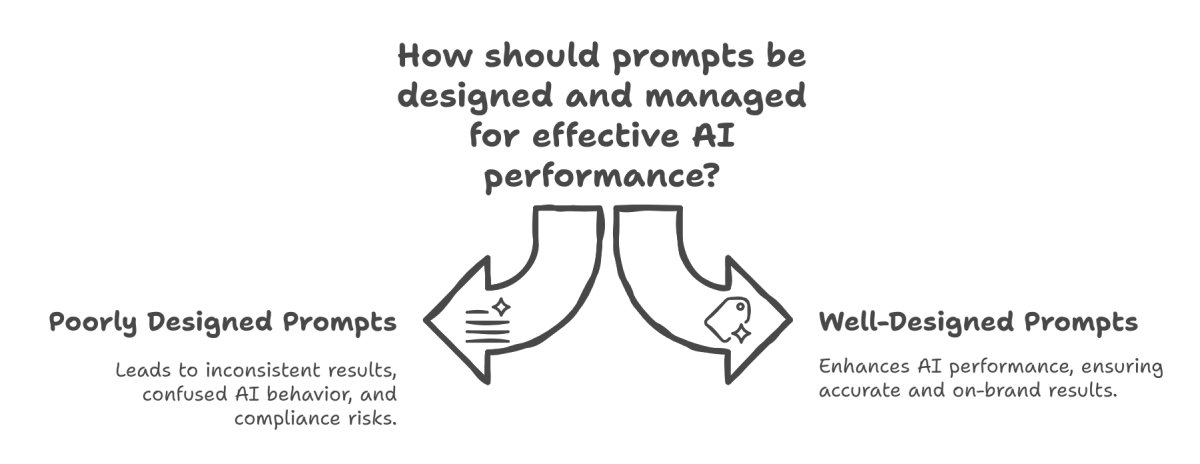

At the core of every LLM interaction is a prompt – and that prompt effectively acts as the UI between human intent and machine execution. Unlike a traditional API call, which is a rigid, well-defined request, a prompt is a fuzzy interface written in natural language[3]. This fuzziness is powerful (you can ask for virtually anything) but also risky (the AI might interpret instructions in unintended ways).

That’s why forward-thinking companies treat prompts as a first-class component of their AI stack, on par with UI design or API development. A prompt strategy means developing standardized, well-tested “inputs” that reliably produce outputs aligned with your business needs.

Why is this so important?

Think of prompts as a new layer of business logic. Poorly designed prompts can lead to inconsistent results, confused AI behavior, and even compliance or brand risks. Conversely, well-designed prompts can make an average model perform like a tailored expert.

For example, a legal team using an LLM to draft contract summaries will only trust the system if the prompt consistently yields accurate, on-brand summaries. As one tech consultant put it, prompt engineering should be seen not as a dev hack but as a first-class component in your AI stack, deserving the same design, testing, and lifecycle management as code or APIs[4].

In practice, this means your AI and product teams should collaborate on prompt development with the same rigor given to software features: iterate on prompt wording, enforce version control, and ensure prompts meet quality standards before deploying them into mission-critical workflows.

Designing Prompt Architectures Aligned with Company Goals

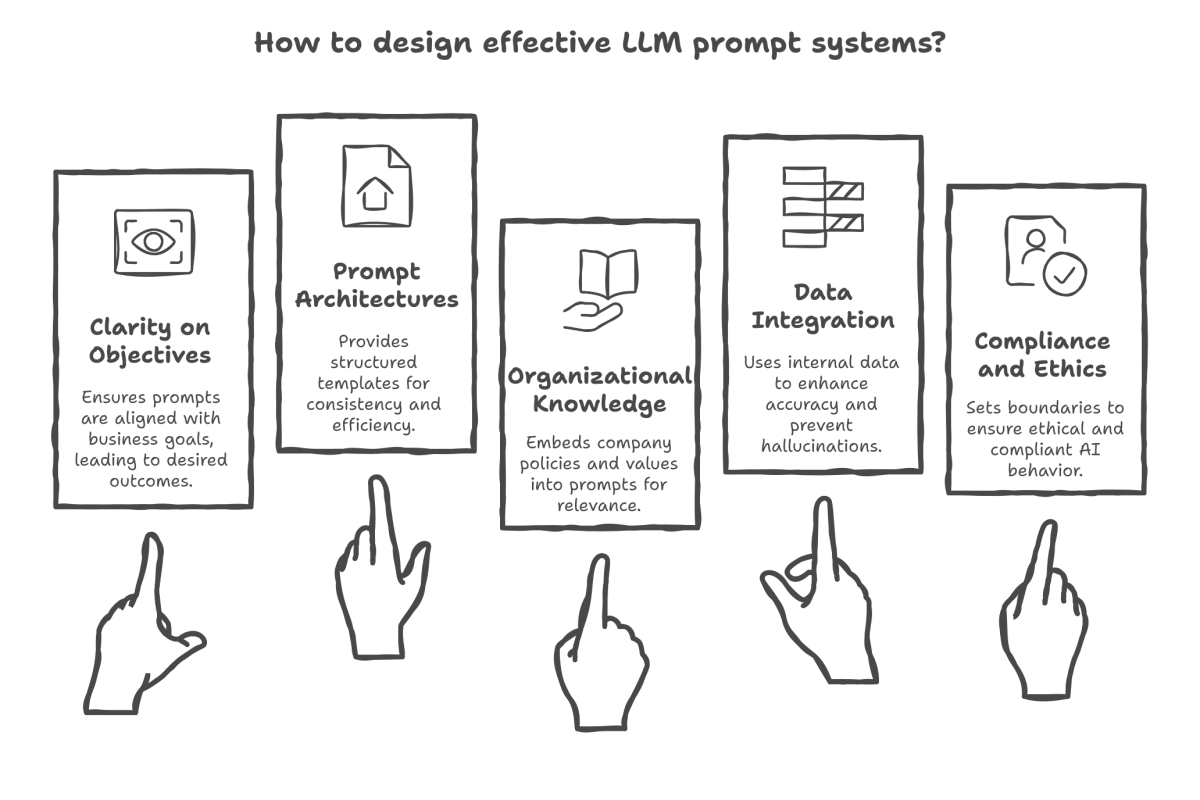

A good prompt strategy starts with clarity on business objectives. What outcome do you need from the AI? A correctly classified support ticket? A polite and on-brand response to a customer? A concise executive summary of a research report?

Each goal might require a differently designed prompt or even a sequence of prompts. Rather than relying on ad-hoc prompts written on the fly, companies are creating prompt architectures – structured prompt templates and libraries that correspond to their key use cases[5].

For example, you might maintain a library of prompt templates: one for “contract summarizer,” another for “customer email draft assistant,” each with language and instructions tuned to your domain. These serve as reusable building blocks.

An MIT Sloan study noted that crafting one-off prompts for each query is inefficient; instead, leading teams compile libraries of proven prompt templates that act as “cognitive scaffolding” for different tasks[6].

In plain terms, rather than reinventing the wheel every time an employee needs an AI to do something, you provide a ready-made prompt playbook. This ensures consistency and saves time. It also helps with educating staff on how to use AI – the template itself teaches them what a good prompt looks like.

Crucially, prompt design must encode your organizational knowledge and values. This is where prompts become a true business layer. You can embed context about your company’s policies, tone, and expectations directly into the prompt.

For instance, instead of a generic instruction like “Summarize this report,” your prompt can set a role and context: “You are a financial analyst assistant. Summarize this Q3 earnings report in a brief, factual style for our executive team.” Experiments suggest that adding such role context can markedly improve the relevance and quality of AI outputs[7]. By including details like the target audience (executive team) and desired tone (brief, factual), you align the AI’s output with business objectives (in this case, giving leadership only the important facts, in the right tone).

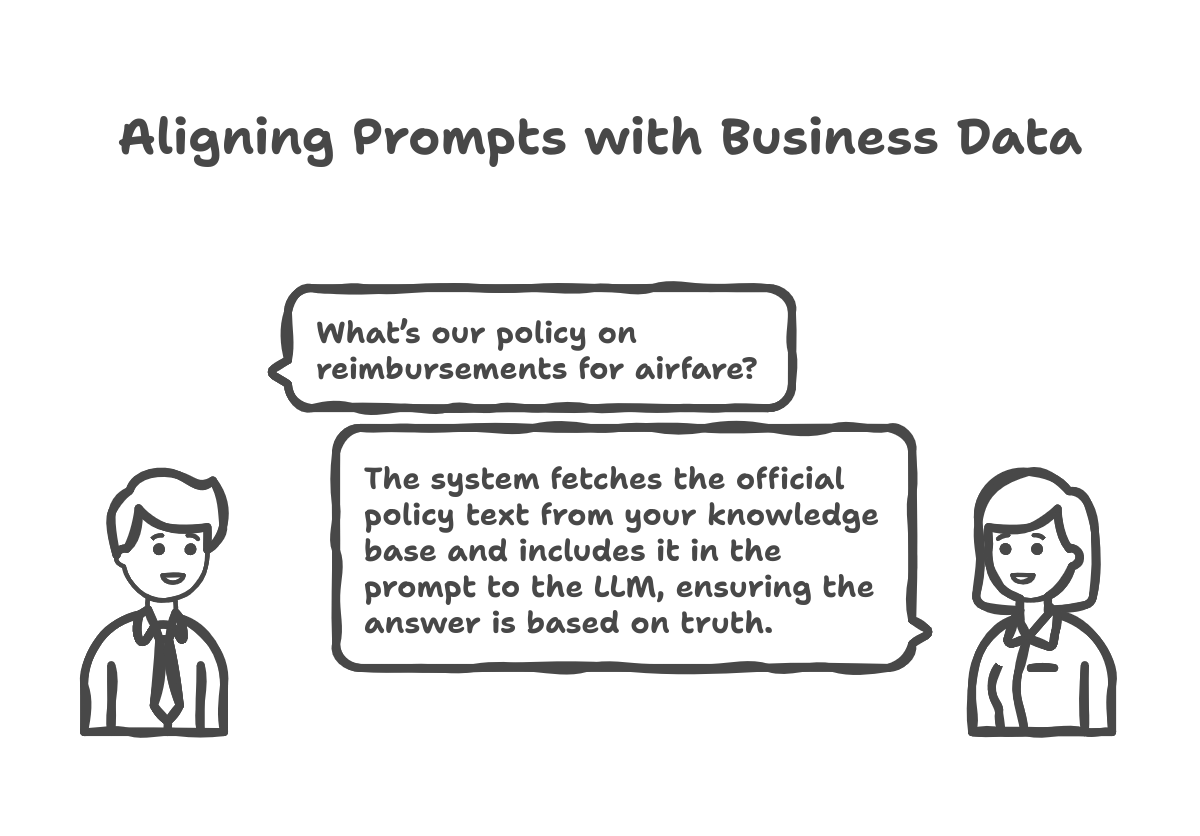

Another aspect of alignment is grounding the AI in the correct data. Out-of-the-box LLMs have general knowledge but may hallucinate or err on specifics. A strong prompt strategy uses your organization’s data to compensate for this.

Many companies are now integrating vector databases and retrieval steps into their prompt workflows. Essentially, they feed the prompt with relevant internal information whenever a query comes up.

For example, if an employee asks a chatbot, “What’s our policy on reimbursements for airfare?”, the system can first fetch the official policy text from your knowledge base (using semantic search on embeddings) and include it in the prompt it gives the LLM. This way, the answer is grounded in the official policy text, not the model’s guess.

As one architecture guide explains, if a user asks about a specific accounting regulation, the system can vector-search the corpus of accounting rules and retrieve the exact rule text to insert into the prompt[8]. The LLM then answers with reference to that snippet, ensuring accuracy and context. Aligning prompts with business data in this manner turns the LLM into a far more reliable assistant.

It’s prompt engineering married with robust data engineering – and it prevents a lot of hallucinated answers.
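The retrieve-then-prompt flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `KNOWLEDGE_BASE` entries are made-up sample data, and `embed` is a toy bag-of-words stand-in for a real embedding model and vector database.

```python
import math
import re
from collections import Counter

# Hypothetical sample data; a real system would store embeddings in a vector database.
KNOWLEDGE_BASE = {
    "travel-policy": "Airfare is reimbursed for economy class tickets booked in advance.",
    "expense-policy": "Meals are reimbursed up to a daily limit when traveling for work.",
    "leave-policy": "Employees accrue vacation days each month of service.",
}

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, top_k=1):
    """Return the top_k (doc_id, text) pairs most similar to the question."""
    query = embed(question)
    ranked = sorted(
        KNOWLEDGE_BASE.items(),
        key=lambda item: cosine(query, embed(item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_grounded_prompt(question):
    """Insert the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question))
    return (
        "Answer using only the policy excerpts below. "
        "If the answer is not in the excerpts, say you do not know.\n\n"
        f"Policy excerpts:\n{context}\n\nQuestion: {question}"
    )

print(build_grounded_prompt("What's our policy on reimbursements for airfare?"))
```

Telling the model to admit when the excerpts do not contain the answer is one common way to discourage guessing; how well it works depends on the model.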

Finally, alignment means compliance and ethics, too. If you operate in a regulated industry or have strict brand guidelines, your prompts should reflect that.

A prompt strategy might involve system prompts (initial instructions given to the model) that explicitly set boundaries: e.g. “The assistant should not provide financial advice” or “Use a friendly, professional tone that matches our brand voice.” These upfront instructions act like guardrails. They ensure the AI stays in its lane, much as a code library might enforce security or logging by default.
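As a rough sketch, the boundary-setting instructions can live in one place and be attached to every request. The role-tagged message list below follows a convention many chat-style LLM APIs use, but the exact format depends on your provider; `SYSTEM_RULES` and `build_messages` are illustrative names.

```python
# Guardrail rules taken from the examples above; in practice these would be
# reviewed by legal, compliance, or brand owners.
SYSTEM_RULES = [
    "The assistant should not provide financial advice.",
    "Use a friendly, professional tone that matches our brand voice.",
]

def build_messages(user_input, rules=SYSTEM_RULES):
    """Prepend the system prompt so every request carries the same boundaries."""
    system_prompt = "Follow these rules in every reply:\n" + "\n".join(
        f"- {rule}" for rule in rules
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]

messages = build_messages("Should I move my savings into index funds?")
```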

In short, designing prompt architectures aligned with company goals requires weaving in domain context, data references, and policy guidelines into the very text that guides the AI.

From Modular Prompts to Prompt Libraries (Examples in Practice)

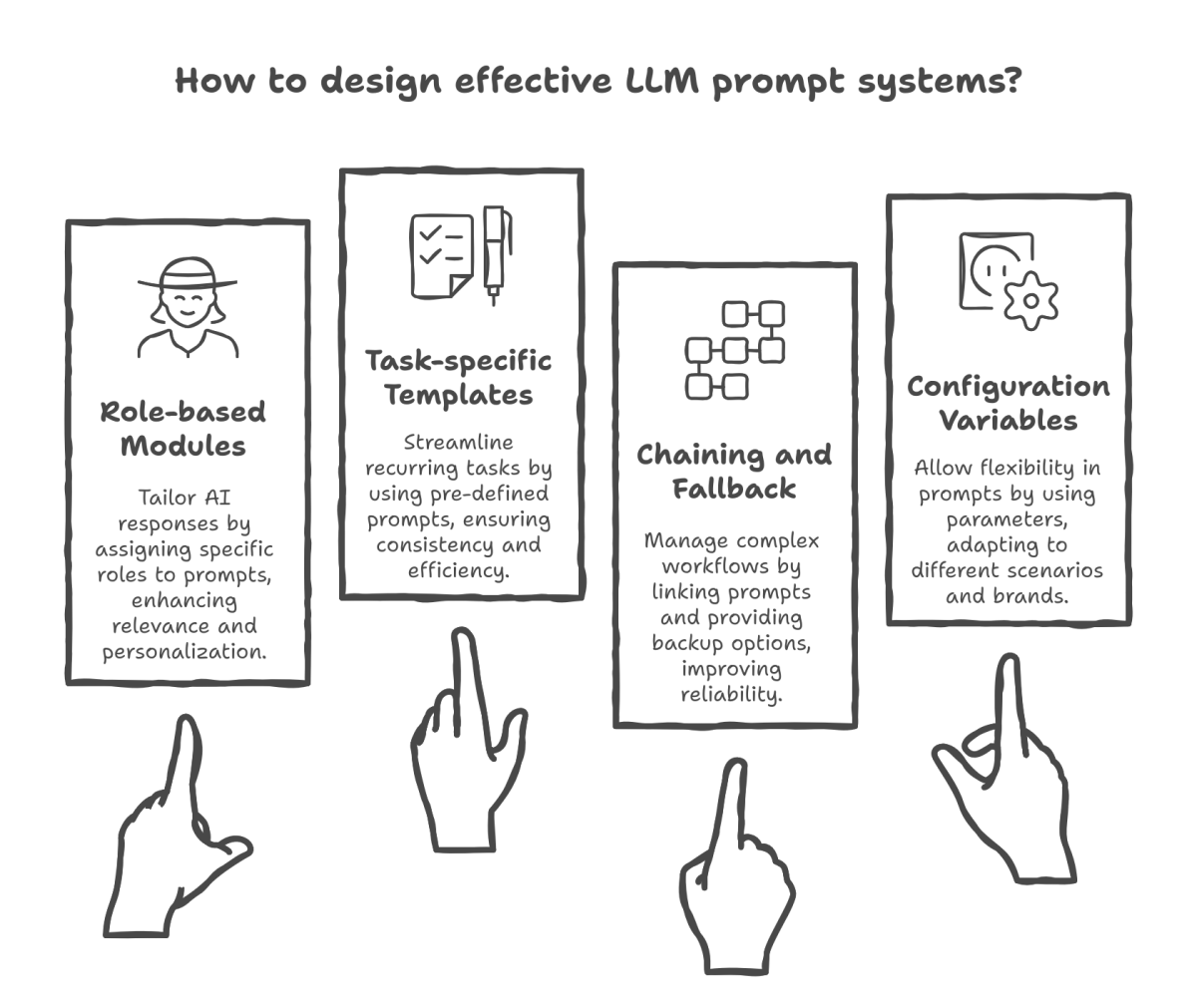

To make this concrete, consider how an enterprise might implement modular prompt components. Rather than a gigantic prompt trying to handle everything, leading practitioners break prompting into pieces that can be mixed and matched[9]. For instance:

1) Role-based prompt modules

You create a module for the AI’s persona or role, e.g. “You are an HR Assistant specialized in company policy.” This can be prepended to various prompts for HR queries. Another module could be “You are a SaaS product onboarding expert speaking to a non-technical customer.” Swapping personas helps the AI tailor its language appropriately.

2) Task-specific templates

As mentioned, you maintain templates for recurring tasks. If marketing needs taglines, you have a prompt that says “Generate 5 tagline options for [product], each under 8 words, focusing on [value proposition].”

If legal needs contract summaries, you have a prompt that says “Summarize the following contract clause and identify any liabilities or deadlines, in bullet points:” followed by the clause text.

3) Chaining and fallback

Sometimes one prompt isn’t enough. You might chain prompts (the output of one becomes input to another) for complex workflows.

For example, a modular chain might first prompt the AI to extract key data from a document, then feed those results into a second prompt that produces a summary or recommendation. Modular design means each prompt in the chain is scoped to a sub-task (improving reliability).

If one step fails, you can handle it (maybe have a simple rule-based fallback or human review). This is akin to breaking a big function into smaller, testable functions in code.

4) Configuration variables

You might allow certain prompts to accept parameters. For instance, a prompt template for customer email replies could have a variable for “tone” that can be set to formal or friendly.

The template might look like: “You are a support agent. Reply to the customer with a {tone} tone: [customer message here]”.

This way, the same base prompt can serve multiple brands or scenarios by plugging in a different tone or other variables. This prompt parametrization supports consistency while allowing flexibility where needed.
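Put together, the four ideas above (role modules, task templates, chaining with a fallback, and configuration variables) might look something like the sketch below. The dictionary keys, the `extract` and `summarize` templates, and the `llm` callable are assumptions for illustration; `llm` stands in for whatever model client you use.

```python
# Role modules: reusable persona statements.
ROLES = {
    "hr": "You are an HR Assistant specialized in company policy.",
    "support": "You are a support agent.",
}

# Task templates with named variables (hypothetical keys).
TASKS = {
    "tagline": "Generate 5 tagline options for {product}, each under 8 words, "
               "focusing on {value_proposition}.",
    "email_reply": "Reply to the customer with a {tone} tone: {message}",
    "extract": "List the key facts in the following document:\n{document}",
    "summarize": "Summarize these facts in one sentence:\n{facts}",
}

def compose(role, task, **variables):
    """Combine a role module with a filled-in task template."""
    return f"{ROLES[role]}\n\n{TASKS[task].format(**variables)}"

def run_chain(document, llm):
    """Two-step chain: extract facts, then summarize them.

    Falls back to human review if the first step returns nothing.
    """
    facts = llm(compose("hr", "extract", document=document))
    if not facts.strip():
        return "NEEDS_HUMAN_REVIEW"
    return llm(compose("hr", "summarize", facts=facts))

# The same base template serves two tones.
formal = compose("support", "email_reply", tone="formal", message="Where is my order?")
friendly = compose("support", "email_reply", tone="friendly", message="Where is my order?")
```

Because each step is a small function, you can test the extraction prompt and the summary prompt separately, much like unit-testing individual functions.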

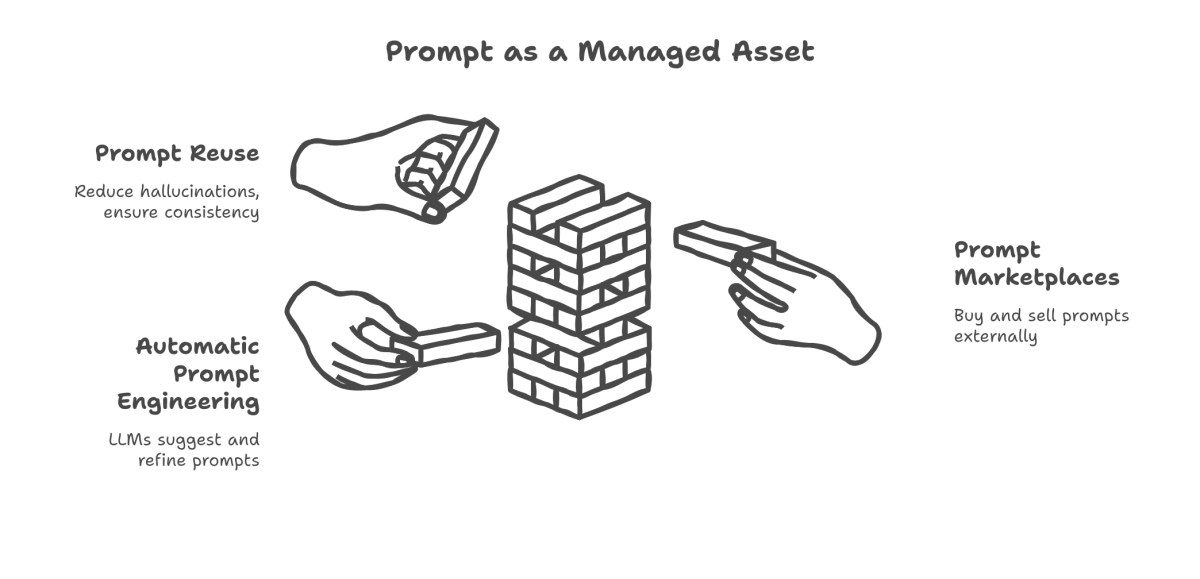

Reuse and Consistency by Implementing Prompt Libraries

By implementing prompt libraries and modular components, organizations gain reuse and consistency. A Symphonize industry report noted that companies are creating prompt libraries tied to business tasks (e.g. a “contract summarizer v3” prompt that multiple teams can leverage).

These libraries often include version numbers, documentation, and examples – very much like code libraries. The benefit is twofold: (1) Speed to market – teams don’t start from scratch every time they add an AI feature; they pull from the library. (2) Quality control – prompts in the library can be vetted by domain experts and compliance officers ahead of time.

As a result, prompt reuse helps reduce AI hallucinations and off-brand outputs while ensuring different parts of the organization get consistent results[10].
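To make the library idea concrete, here is a minimal in-memory sketch. The class names, fields, and sample entries are illustrative assumptions; a real library would more likely live in a repository or a prompt management tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: int
    template: str
    owner: str   # person or team responsible for maintenance
    notes: str   # short documentation of what changed and why

class PromptLibrary:
    def __init__(self):
        self._items = {}

    def register(self, prompt):
        """Add a template; refuse to overwrite an existing version."""
        key = (prompt.name, prompt.version)
        if key in self._items:
            raise ValueError(f"{prompt.name} v{prompt.version} already registered")
        self._items[key] = prompt

    def get(self, name, version=None):
        """Fetch a specific version, or the latest when no version is given."""
        versions = [v for (n, v) in self._items if n == name]
        if not versions:
            raise KeyError(name)
        chosen = version if version is not None else max(versions)
        return self._items[(name, chosen)]

library = PromptLibrary()
library.register(PromptTemplate(
    "contract_summarizer", 1,
    "Summarize the following contract clause:\n{clause}",
    owner="legal-ops", notes="initial version",
))
library.register(PromptTemplate(
    "contract_summarizer", 2,
    "Summarize the following contract clause and identify any liabilities "
    "or deadlines, in bullet points:\n{clause}",
    owner="legal-ops", notes="added liabilities, deadlines, bullet format",
))
latest = library.get("contract_summarizer")
```

Refusing to overwrite a registered version keeps old versions reproducible, which matters when you need to explain why an output changed.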

Even beyond internal libraries, we’re seeing the rise of prompt marketplaces where prompts themselves are bought and sold (much like APIs or software components).

It’s early days, but the fact that a marketplace like PromptBase exists highlights that prompts are becoming standalone assets. Some LLMs can even suggest and refine prompts for you – so-called “automatic prompt engineering.”

In the near future, organizations might source prompts externally the way they license software, then adapt them to their needs[11]. All of this reinforces the idea: a prompt is not just an input – it’s an asset that can be created, improved, shared, and managed.

Testing, Auditing, and Evolving Prompts

Because prompts play such a critical role, treating them as a living product means implementing continuous improvement and governance practices. You wouldn’t deploy a piece of software to thousands of users without testing it – the same goes for prompts that will generate content or decisions at scale. What does prompt testing and auditing look like in practice?

Versioning and change management

Maintain prompts in a version control system (even if it’s just a disciplined document or repository). This way, when you tweak a prompt to improve it, you have a record of what changed and why (e.g., “v2 – added bullet point format in instructions to improve consistency”).

Some companies even assign owners to important prompts (say, the “AI Sales Email Draft” prompt) who are responsible for its maintenance. This is akin to owning a microservice. As one report put it, “mature orgs are now versioning prompts like code” to track improvements[12].
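If your prompts are stored as plain text, even the standard library can show what changed between versions. The sketch below uses Python's `difflib`; the two prompt strings and the `CHANGELOG` structure are illustrative, and in practice a Git repository would give you the same record for free.

```python
import difflib

V1 = "Summarize the following contract clause and identify any liabilities or deadlines:"
V2 = ("Summarize the following contract clause and identify any liabilities "
      "or deadlines, in bullet points:")

def prompt_diff(old, new, old_label, new_label):
    """Return a unified diff between two prompt versions."""
    return "\n".join(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile=old_label, tofile=new_label, lineterm="",
    ))

# A change record pairs the diff with the reason for the change.
CHANGELOG = [
    {"version": "v2",
     "why": "added bullet point format in instructions to improve consistency"},
]

print(prompt_diff(V1, V2, "v1", "v2"))
```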

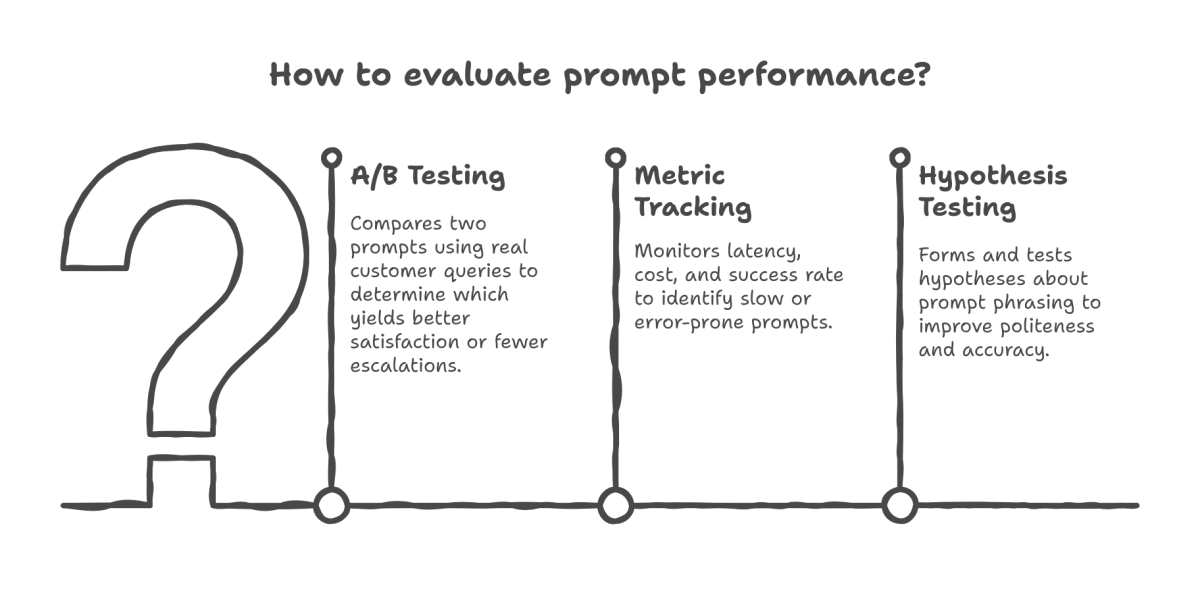

Prompt evaluation and A/B testing

Whenever possible, evaluate prompt performance with real or simulated inputs. For example, if you have two candidate prompts for a customer support chatbot, run an A/B test: have each prompt respond to a sample of real customer queries and then compare outcomes. Which prompt yields higher customer satisfaction or fewer escalations?

There are emerging tools to facilitate such tests, and even open-source frameworks (like promptfoo or OpenAI’s Evals) that let you define evaluation criteria. The key is to treat prompts scientifically: form a hypothesis (“Prompt phrased this way will be more polite without losing accuracy”), then test it with data. This can be as simple as a weekly review of outputs. Importantly, track metrics that matter – e.g. accuracy of the answer, or ratings from users on whether the AI was helpful.

Some organizations track prompt-level metrics such as latency (how long the prompt takes to process), cost (if using an API with token billing), and success rate[13]. By logging these, you might discover that a certain prompt is very slow or prone to errors, prompting you to refine it.
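A bare-bones version of such a comparison might look like the following. It is a sketch under stated assumptions: `fake_llm` replaces a real model call so the code runs offline, `is_polite` is a deliberately simple check, and word count is only a crude proxy for token cost.

```python
import time

def evaluate(template, cases, llm, check):
    """Run one prompt template over test cases and collect simple metrics."""
    if not cases:
        raise ValueError("need at least one test case")
    passed, latencies, prompt_words = 0, [], []
    for case in cases:
        prompt = template.format(**case["inputs"])
        start = time.perf_counter()
        output = llm(prompt)
        latencies.append(time.perf_counter() - start)
        prompt_words.append(len(prompt.split()))  # crude proxy for token cost
        passed += bool(check(output, case))
    n = len(cases)
    return {
        "success_rate": passed / n,
        "avg_latency_s": sum(latencies) / n,
        "avg_prompt_words": sum(prompt_words) / n,
    }

def fake_llm(prompt):
    # Stand-in for a real model call so the sketch runs offline.
    if "politely" in prompt.lower():
        return "Sorry for the trouble. Please reset your password."
    return "Reset your password."

def is_polite(output, case):
    # Hypothetical success criterion: the reply contains an apology.
    return "sorry" in output.lower()

CASES = [
    {"inputs": {"message": "I can't log in."}},
    {"inputs": {"message": "My invoice is wrong."}},
]
PROMPT_A = "Reply to the customer: {message}"
PROMPT_B = "Reply politely to the customer: {message}"

report = {
    name: evaluate(template, CASES, fake_llm, is_polite)
    for name, template in [("A", PROMPT_A), ("B", PROMPT_B)]
}
```

With a real model you would swap `fake_llm` for your client call and replace `is_polite` with a metric that matters to you, such as user ratings or escalation counts.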

Monitoring and “prompt ops”

Once a prompt is live in production (e.g., powering a chatbot or generating content in an app), set up monitoring just as you would for any service. If the AI’s outputs start drifting (say it suddenly gives more irrelevant answers), you need to know and intervene.

Some teams implement prompt observability – tooling that records inputs and outputs so you can audit them later. For instance, you might log every time the AI couldn’t answer and had to fall back to a human, then review those cases to see if adjusting the prompt could have helped. Without telemetry, your AI can become a black box; with it, you gain insight into failure modes[14].

Regular audits (possibly with humans in the loop) of a random sample of AI outputs are another good practice, ensuring that any rogue behavior is caught early.
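A simple starting point for this kind of telemetry is a structured log you can filter and sample. The sketch below keeps records in memory for clarity; the `PromptLog` class and its field names are assumptions, and a production setup would write to your existing logging or observability stack.

```python
import json
import random
import time

class PromptLog:
    """Records prompt inputs and outputs so they can be audited later."""

    def __init__(self):
        self.records = []

    def record(self, prompt_id, user_input, output, fell_back_to_human=False):
        self.records.append({
            "ts": time.time(),
            "prompt_id": prompt_id,
            "input": user_input,
            "output": output,
            "fell_back_to_human": fell_back_to_human,
        })

    def fallback_cases(self):
        """Cases where the AI could not answer and a human took over."""
        return [r for r in self.records if r["fell_back_to_human"]]

    def audit_sample(self, k, seed=None):
        """Random sample of records for human review."""
        rng = random.Random(seed)
        return rng.sample(self.records, min(k, len(self.records)))

    def to_jsonl(self):
        """Serialize as JSON Lines, one record per line."""
        return "\n".join(json.dumps(r) for r in self.records)

log = PromptLog()
log.record("support_reply_v2", "Where is my order?", "It ships tomorrow.")
log.record("support_reply_v2", "Cancel my contract.", "", fell_back_to_human=True)
log.record("support_reply_v2", "Reset my password.", "Use the reset link.")
```

Reviewing `fallback_cases()` regularly shows where a prompt change might have avoided a handoff.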

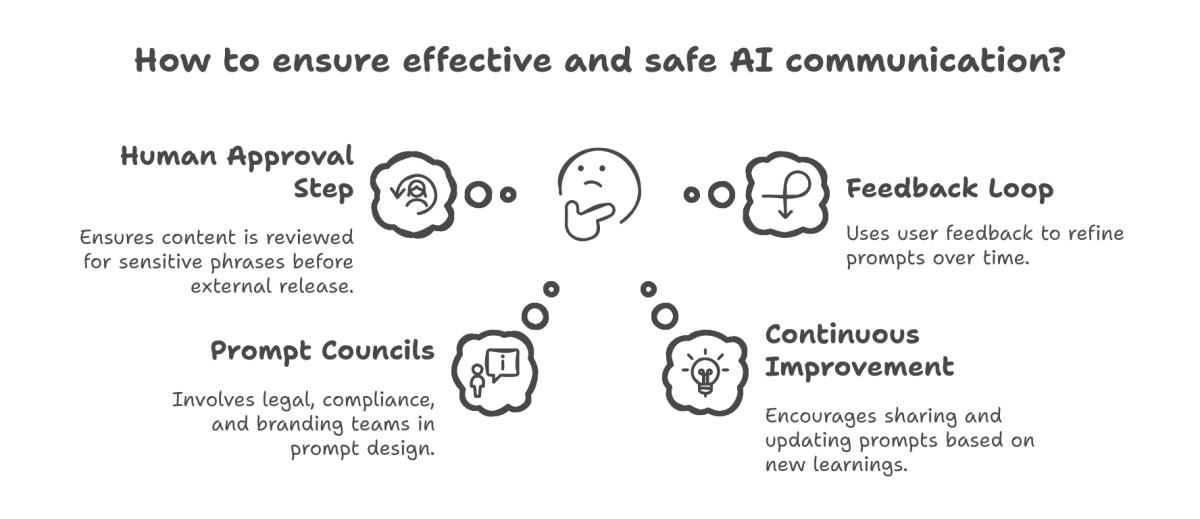

Governance and approval workflows

Particularly for content that goes to external audiences (customers, the public), you may want a human approval step or strict guidelines. Your prompt strategy can include automatic checks: for example, if an AI-written email contains a sensitive phrase, route it to a human reviewer before it is sent.

Some enterprises set up prompt councils or include prompt design in existing content review boards. This ensures that legal, compliance, or branding folks have input on how the AI communicates. It might feel bureaucratic, but it’s better than a prompt inadvertently causing a PR issue because nobody realized the AI could respond a certain way.

Keep in mind that prompt engineering is a fast-evolving field. What works today might be improved tomorrow as we learn more. A culture of continuous improvement is key. Encourage teams to share “prompt learnings” in internal wikis or meetings. Maybe one team discovered a format that reliably reduces model hallucinations – that knowledge should spread.

Also, stay updated with external best practices. (For example, Google’s 2025 prompt engineering whitepaper distilled dozens of such tips and might inspire ideas[15][16].) The bottom line is that prompt strategies are not set-and-forget. They require ongoing tuning as your models, data, or objectives change.

Build a feedback loop: as the AI generates results, collect user feedback and use it to refine prompts. Over time, you’ll cultivate prompt systems that are robust and trusted, much like mature software.

Tools and Methods for Scalable Prompt Engineering

Implementing the above might sound daunting, but fortunately a variety of tools have emerged to support prompt engineering workflows:

Prompt management platforms

Several startups and open-source projects offer interfaces to design, test, and catalog prompts. These act like an IDE for prompt engineers. You can simulate conversation prompts, see how different models respond, and organize prompts in folders (e.g., by department or product).

Vector databases and knowledge integration

We discussed using vector stores to provide context. There are dedicated solutions (Pinecone, Weaviate, etc.) that make it easier to plug your proprietary data into LLM prompts.

Many LLM orchestration frameworks (like LangChain, LlamaIndex) provide out-of-the-box connectors to do a “retrieve-and-read” pattern: fetch relevant text and append it to the prompt. This abstracts some technical complexity so prompt designers can focus on what to ask rather than how to fetch data. In strategic terms, investing in a solid enterprise knowledge index makes your prompts more effective, because the AI can draw on up-to-date internal facts rather than generic training data.

Guardrail libraries

One challenge with natural language prompts is controlling for unwanted outputs (e.g., toxic language or leaked confidential information). To address this, tools like Microsoft Guidance, Guardrails AI, or NVIDIA NeMo Guardrails let you define rules that constrain, intercept, or adjust the LLM’s outputs.

For instance, you can specify “if the output mentions a competitor’s name, rephrase or redact that sentence.” These guardrail frameworks act as a safety net around your prompts.

A robust prompt strategy often pairs prompt design with such automated moderation and validation steps, especially in enterprise settings[17][18]. Think of it as having a co-pilot watching the AI’s answers and correcting course if it goes off-policy.
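To show the idea without tying it to any one framework, here is a plain-Python sketch of the competitor-name rule. It does not use the API of any of the libraries named above; `COMPETITORS` holds made-up names, and the sentence splitting is deliberately naive.

```python
import re

COMPETITORS = ["Acme Corp", "Globex"]  # hypothetical names for illustration

_PATTERN = re.compile("|".join(re.escape(name) for name in COMPETITORS), re.IGNORECASE)

def apply_guardrail(output):
    """Redact any sentence that mentions a competitor.

    Returns the cleaned text and the number of redacted sentences, which a
    caller could use to flag the output for human review.
    """
    sentences = re.split(r"(?<=[.!?])\s+", output.strip())
    cleaned, violations = [], 0
    for sentence in sentences:
        if _PATTERN.search(sentence):
            violations += 1
            cleaned.append("[redacted]")
        else:
            cleaned.append(sentence)
    return " ".join(cleaned), violations

text, count = apply_guardrail("Our plan is cheaper. Acme Corp charges more. Thanks!")
```

Dedicated guardrail frameworks offer richer rule types, but the shape is the same: the check sits between the model and the user, and every intervention is counted.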

Evaluation frameworks

As mentioned, systematic evaluation is important. OpenAI released an open-source Evals framework for building and running evaluation sets. Academic benchmarks like HellaSwag or TruthfulQA can be used to test models on commonsense reasoning or truthfulness, respectively.

There are also “LLM judge” approaches where you use a strong model to evaluate outputs from another (e.g., GPT-4 scoring the quality of responses). These can help scale evaluation when human review is expensive.
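The judge pattern usually comes down to a rubric prompt plus strict parsing of the judge's reply. The sketch below assumes a hypothetical `judge_llm` callable and a made-up `SCORE:` output format; it is one possible arrangement, not a standard.

```python
import re

JUDGE_TEMPLATE = (
    "You are grading an AI assistant's answer.\n"
    "Rate it from 1 (poor) to 5 (excellent) for accuracy and helpfulness.\n"
    "Reply with 'SCORE: <number>' on the first line, then a one-sentence reason.\n\n"
    "Question: {question}\n"
    "Answer: {answer}"
)

def build_judge_prompt(question, answer):
    return JUDGE_TEMPLATE.format(question=question, answer=answer)

def parse_score(judge_output):
    """Extract the 1-5 score, or None if the judge ignored the format."""
    match = re.search(r"SCORE:\s*([1-5])\b", judge_output)
    return int(match.group(1)) if match else None

def judge(question, answer, judge_llm):
    """Ask the judge model to grade an answer and return its parsed score."""
    return parse_score(judge_llm(build_judge_prompt(question, answer)))

# Stub judge so the sketch runs offline; replace with a real model call.
score = judge(
    "What is our refund window?",
    "30 days from delivery.",
    lambda prompt: "SCORE: 4\nClear and direct.",
)
```

Returning `None` for unparseable replies lets you count how often the judge itself misbehaves, which is worth tracking before you trust its scores.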

The field of LLM evaluation is rapidly growing – expect more standardized metrics for things like factuality, helpfulness, etc., which you can integrate into your prompt testing pipeline.

Prompt marketplaces and community forums

While not a traditional “tool,” the community can be an asset. Platforms where prompt engineers share tips or even prompt templates can spark ideas for your use cases. Just remember to vet and adapt any externally sourced prompt to fit your proprietary context (and to avoid inadvertently exposing sensitive info).

Treat prompt engineering as an ongoing discipline supported by proper tooling. Just as DevOps teams use CI/CD, monitoring, and version control, your “PromptOps” (yes, people are calling it that) should use the right mix of design tools, databases, and guardrails to manage prompt life cycles.

The exciting part is that when done well, a prompt strategy can turn a generic AI model into a specialized problem-solver that acts in tight alignment with your business needs and values.

Five Strategic Takeaways

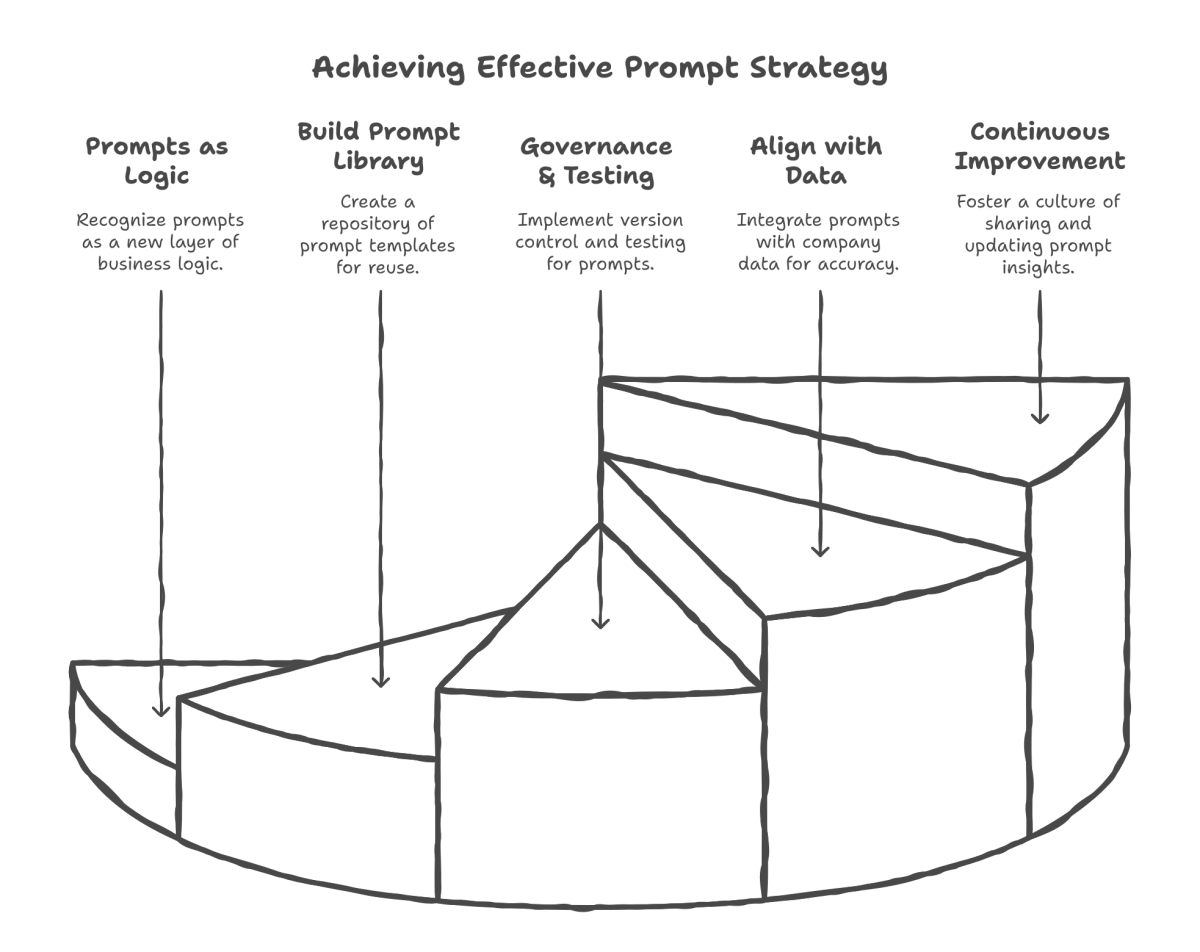

1) Prompts = Business Logic

Don’t view prompts as throwaway phrases. They are a new layer of business logic. Invest time to design prompts that encapsulate your policies, terminology, and goals. A well-structured prompt can drastically improve an AI system’s usefulness and consistency.

2) Build a Prompt Library

Create a repository of prompt templates and modular components for common tasks. This encourages reuse and standardization. Leading firms use prompt libraries (with versioned “prompt blocks”) to ensure different teams leverage proven best practices rather than starting from scratch[5][6].

3) Governance and Testing

Treat prompts like code in production. Establish prompt version control, run A/B tests or evaluations on prompts, and monitor outputs continuously. Incorporate feedback loops so prompts are refined based on real-world results. Without telemetry and oversight, you’re flying blind.

4) Align with Data and Compliance

Augment prompts with relevant context from company data (via vector databases or APIs) to ground answers in truth[8]. Also embed guardrails in your prompt strategy – both in prompt wording (e.g. “stay formal,” “don’t mention XYZ”) and via automated filters – to ensure AI outputs meet your compliance and ethical standards.

5) Continuous Improvement Culture

Encourage a culture of sharing prompt engineering insights across the organization. This field is evolving quickly. What counts as an optimal prompt today might be outdated next year. Stay abreast of emerging techniques (from research and industry) and be ready to update your prompt playbook. In many ways, prompt strategy is now a core competency for AI-driven businesses – nurture it accordingly.