The surge of advanced AI – from predictive analytics to generative models – has made “AI strategy” a boardroom imperative rather than a buzzword. Yet many companies still struggle to move beyond piecemeal pilots to enterprise-scale AI impact.

Recent research shows that almost all companies are investing in AI, but only about 1% feel they have fully matured deployments integrated into workflows. In other words, the limiting factor isn’t AI technology – it’s the strategy and leadership needed to harness it.

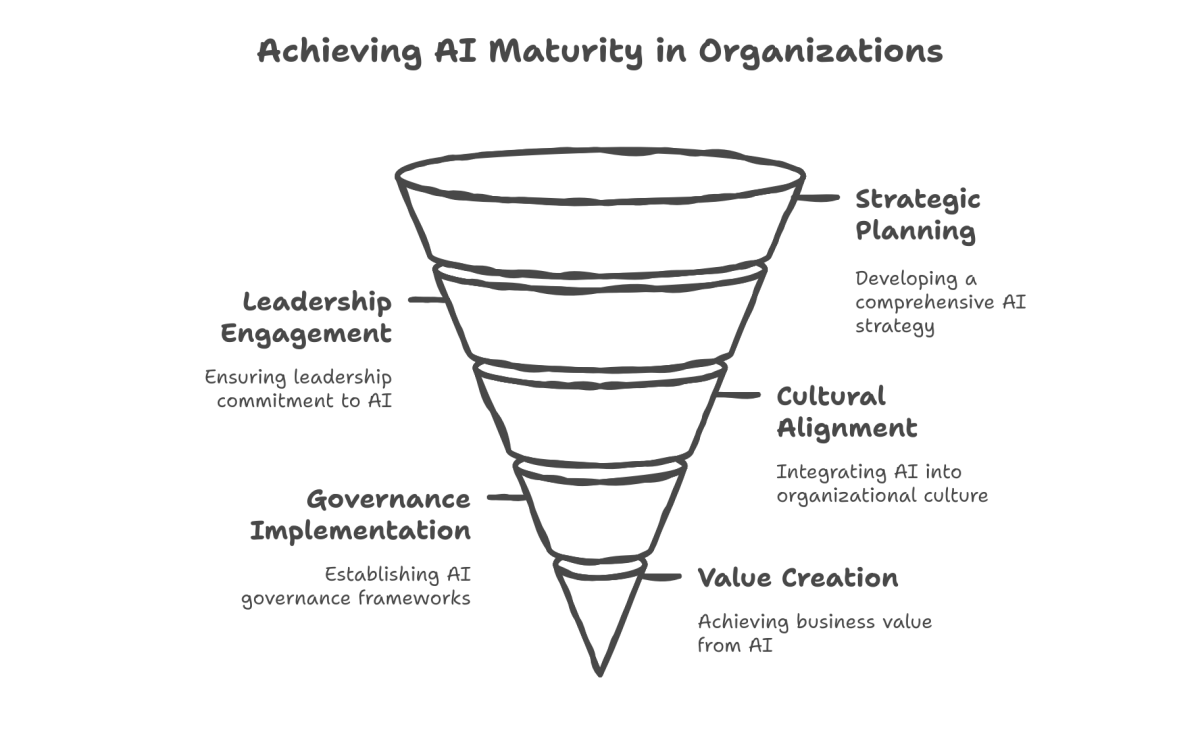

A modern AI strategy spans far more than picking models or data; it’s a comprehensive plan aligning AI capabilities with business goals, governance, culture, and value creation.

This article explores how organizations can craft and execute such an AI strategy, from assessing AI maturity to building internal centers of excellence, navigating regulations, and choosing the right build-vs-buy mix. The goal is to provide CIOs, CTOs, and transformation leaders – especially in the Nordics – with implementation-ready insight into turning AI from experiment into enterprise advantage.

“The true barrier to AI adoption isn’t technical – it’s a lack of leadership alignment on AI strategy, investment, and risk management.”

McKinsey (2025 survey)[2][4]

Beyond Models and Data: What Constitutes a Modern AI Strategy

An effective AI strategy starts with a fundamental mindset shift: recognizing AI as a business transformation enabler, not just a technology project.

This means moving beyond ad-hoc model deployments to a holistic plan that ties AI initiatives directly to the organization’s mission, KPIs, and workflows. Leadership vision is crucial – McKinsey’s 2025 workplace AI report found that employees are largely ready to embrace AI, but leaders moving too slowly is the biggest barrier to scaling[5]. Successful AI-driven companies treat AI projects not as isolated IT experiments, but as part of core business strategy driven from the top.

Modern AI strategy encompasses several dimensions: business alignment, organizational readiness, and continuous learning. Rather than asking “Which algorithm should we use?”, leaders first ask “What business problems are we solving or what opportunities are we pursuing with AI?” This requires translating corporate objectives and OKRs into AI use cases.

For example, if a key goal is to improve customer retention, an AI strategy might include deploying churn prediction models and personalized recommendation engines, integrated into the customer success workflow.

Another key element is cultural and workforce readiness. AI adoption often fails not due to model accuracy, but due to lack of trust or understanding among employees. Building an AI-ready culture involves educating staff, addressing change management, and fostering an experimental mindset. Employees need to see AI as a collaborative tool, not a black box threat.

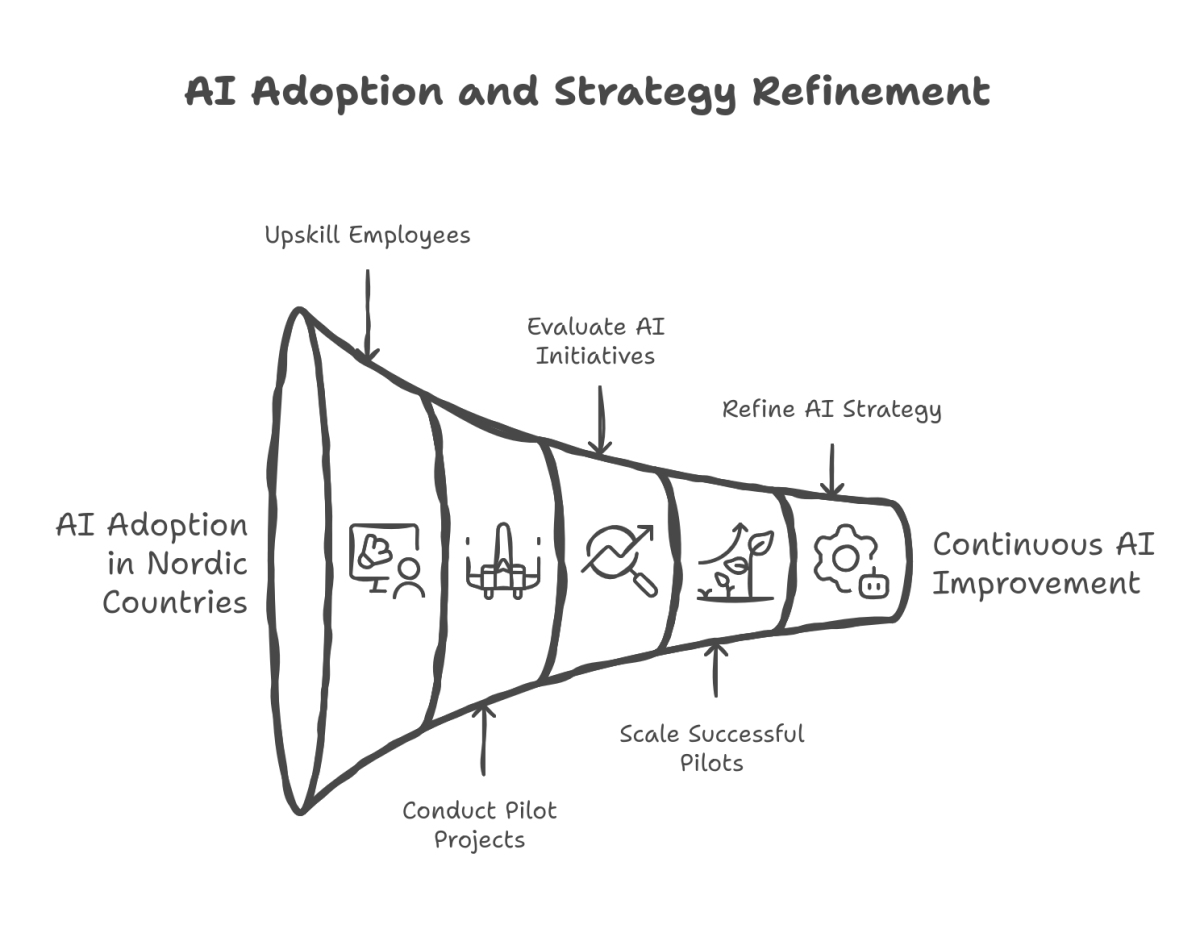

A recent Microsoft study suggests that Finnish firms are relatively far along here: 61% of Finnish organizations already use AI, ahead of peers in Norway (52%), Denmark (48%), and Sweden (45%)[6]. This early adoption reflects a Nordic culture of embracing digital tools, but it also underscores the need to purposefully upskill employees and encourage cross-functional AI literacy as part of strategy.

Many enterprises now run AI training programs, internal AI academies, and pilot projects to build comfort and skills across business units.

Crucially, AI strategy is iterative. Just as business strategy is revisited regularly, AI plans should evolve with new technologies and lessons learned. Agile governance – where AI initiatives are evaluated and adjusted on a rolling basis – helps organizations avoid being locked into yesterday’s approach.

The strategy must account for scaling successful pilots and pruning those that don’t show value, creating a feedback loop of continuous improvement.

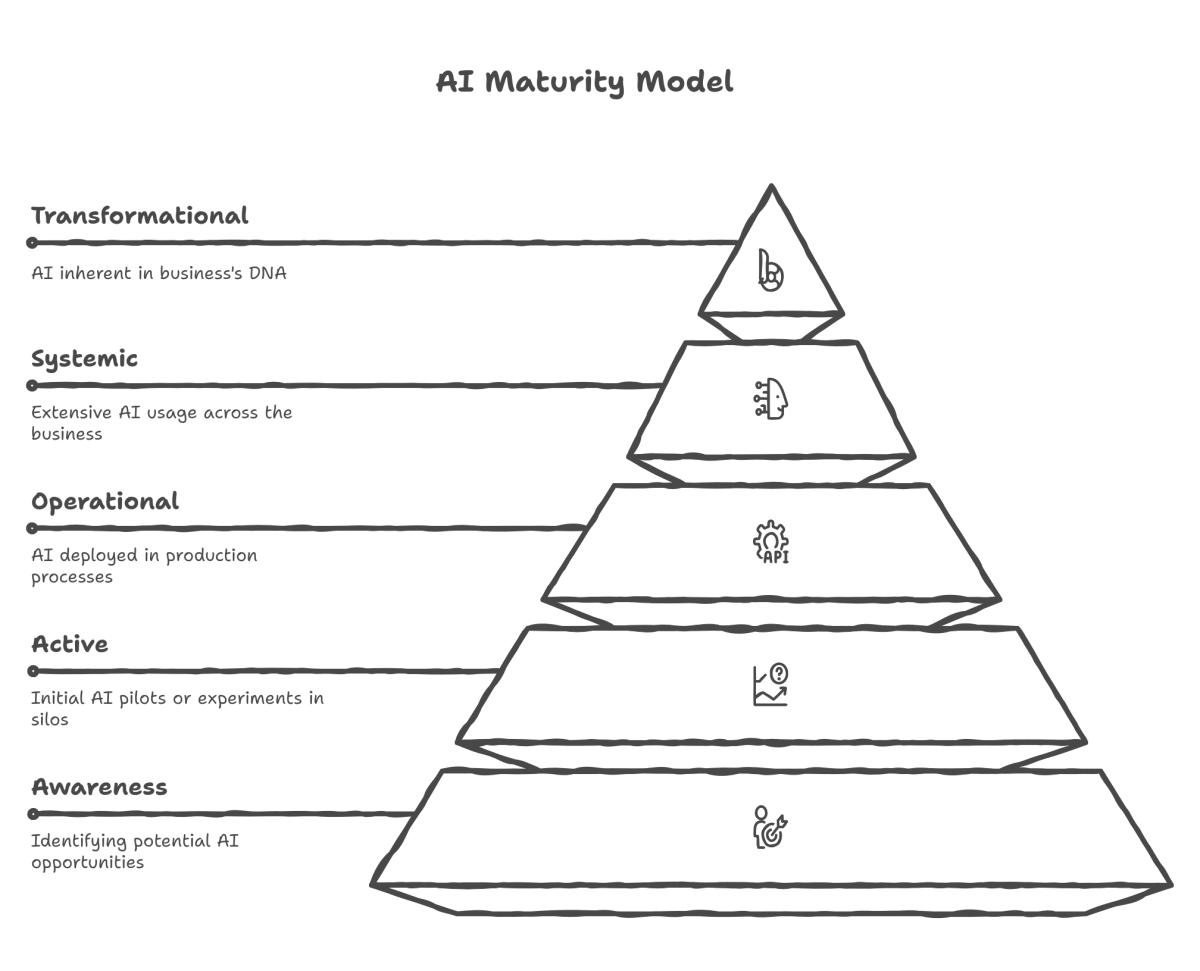

AI Maturity Models: Assessing Organizational Readiness

How does an organization know where it stands on the AI adoption curve? This is where AI maturity models come in handy. Frameworks by Gartner, McKinsey, and others provide a staged view of AI capability – from nascent experimentation to transformative, AI-driven business models. Gartner’s AI Maturity Model, for instance, defines five levels[7]:

- Level 1 – Awareness: AI is on the radar but not actively used; organizations are identifying potential opportunities[9].

- Level 2 – Active: Initial pilots or experiments exist, often in silos; there’s interest but no comprehensive strategy yet.

- Level 3 – Operational: A defined AI strategy is in place and AI is deployed in production processes to drive outcomes; focus shifts to performance measurement and optimization.

- Level 4 – Systemic: AI usage is extensive and pervasive across the business, supported by an innovative culture; AI is embedded in all key workflows, driving efficiency and new value creation[12].

- Level 5 – Transformational: AI is inherent in the business’s DNA, continuously used to reinvent products and even business models; the company is seen as an AI leader disrupting its industry[13].

Most firms today find themselves in the lower to middle levels. In fact, only about 9% of companies reach the “Transformational” level 5 where AI is part of the core DNA[14]. Boston Consulting Group’s 2024 global survey similarly found just 4% have achieved “cutting-edge” AI capabilities enterprise-wide, while another 22% are in the process of scaling and seeing substantial gains – the rest (74%) have yet to realize tangible AI value at scale[15].

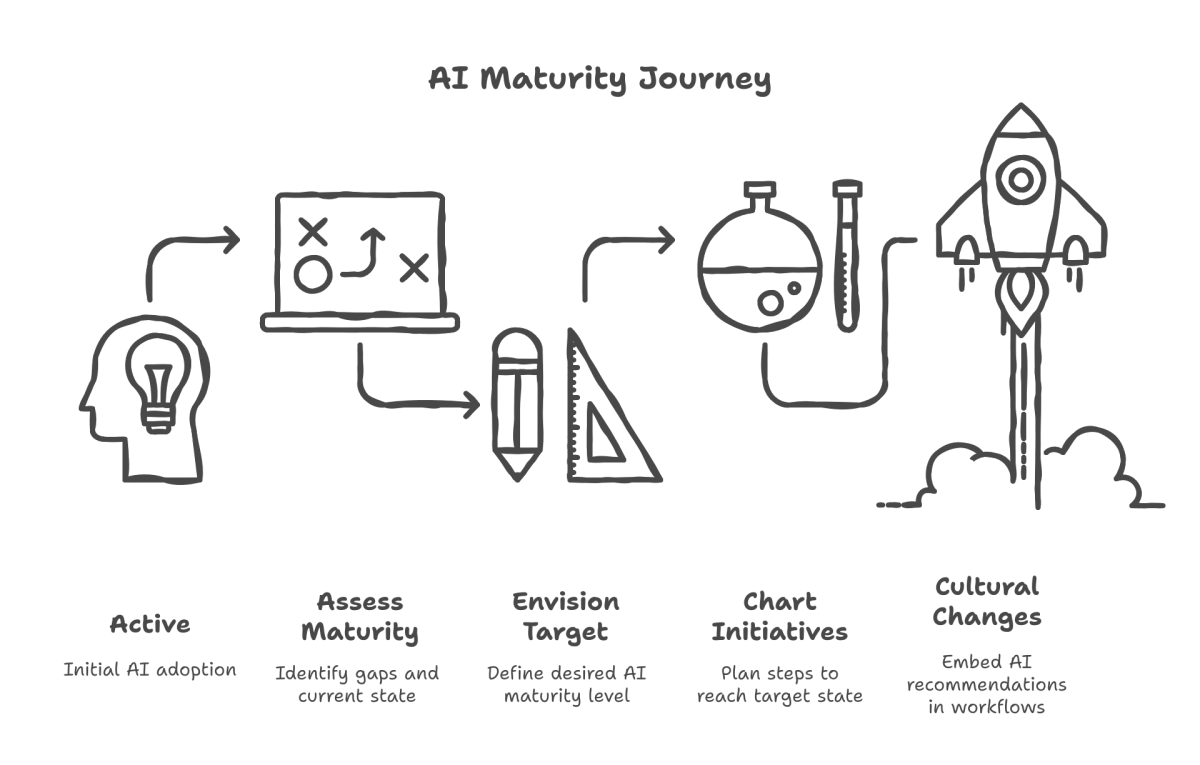

These statistics underscore that most organizations are still in the early innings of the AI journey. The maturity model provides a roadmap: if you’re at Level 2 with scattered pilots, the next step is to formulate a unified AI strategy and start integrating AI into key operations (Level 3).

How can leaders use this?

First, assess current maturity – perhaps via internal audit or third-party evaluation – to pinpoint gaps. Is there an AI strategy at all? Are projects aligned with business goals? Are data infrastructure and talent in place? These questions reveal whether you’re merely “Active” or truly “Operational.”

Next, envision the target state (e.g. reaching Level 4 systemic usage in 3 years) and chart initiatives to get there: maybe building a central AI platform, establishing data governance, or recruiting needed skills. The maturity model also highlights that progress isn’t only technical – cultural and process changes (for instance, re-engineering workflows to embed AI recommendations) are what elevate an organization to higher levels[17].

Notably, BCG found that AI leaders devote roughly 70% of their effort to people and processes, against 20% to technology and data and only 10% to algorithms, which suggests that human factors outweigh technology once basic capabilities are in place.
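The self-assessment described above can be sketched as a simple checklist. This is a minimal illustration, not an official Gartner instrument: the criterion names (`pilots_running`, `documented_ai_strategy`, and so on) are hypothetical and only paraphrase the level descriptions in this article.

```python
# Hypothetical yes/no criteria per level, paraphrased from the level
# descriptions in this article. Level 1 (Awareness) has no entry criteria.
LEVEL_CRITERIA = {
    2: ["pilots_running"],
    3: ["documented_ai_strategy", "ai_in_production"],
    4: ["ai_in_key_workflows", "culture_supports_ai"],
    5: ["ai_reshapes_business_model"],
}

LEVEL_NAMES = {
    1: "Awareness",
    2: "Active",
    3: "Operational",
    4: "Systemic",
    5: "Transformational",
}


def assess_maturity(answers: dict) -> tuple:
    """Return (current level, unmet criteria blocking the next level)."""
    level = 1
    for candidate in sorted(LEVEL_CRITERIA):
        missing = [c for c in LEVEL_CRITERIA[candidate] if not answers.get(c, False)]
        if missing:
            return level, missing
        level = candidate
    return level, []


# Example: pilots and a production model exist, but no written strategy.
answers = {
    "pilots_running": True,
    "documented_ai_strategy": False,
    "ai_in_production": True,
}
level, gaps = assess_maturity(answers)
print(f"Level {level} ({LEVEL_NAMES[level]}); next-level gaps: {gaps}")
```

In this example the organization stays at Level 2 because the strategy document is missing, which mirrors the point that scattered pilots alone do not make a company "Operational."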

Aligning AI with Business Goals, Workflows and Culture

A cornerstone of AI strategy is ensuring that AI efforts directly support and enhance the core business strategy and operations. Too often, companies fall into the trap of “AI for AI’s sake” – launching flashy projects that don’t move the needle on real business outcomes. To avoid this, organizations should establish clear mechanisms to align AI projects with business units, workflows, and objectives from the outset:

- Joint AI-Business Planning: Leading firms set up cross-functional committees or working groups where data scientists and business unit leaders co-create AI use cases. Each potential AI initiative is evaluated on how it ties to a business priority or pain point. For example, a Nordic bank might convene its retail banking executives with its AI team to identify how AI can improve loan processing or risk scoring. By doing this collaboratively, AI solutions are naturally woven into business processes rather than operating in isolation.

- OKR and KPI Integration: It helps to link AI project metrics with the company’s existing objectives and key results (OKRs). If a company’s objective is “increase customer satisfaction by 20%,” an AI-powered customer service chatbot project should then be measured by metrics like issue resolution time or CSAT scores, demonstrating its contribution to that goal. In 2024, nearly 80% of companies were increasing AI investments, but many lacked a clear vision for implementation across the organization[19] – aligning to OKRs provides that vision in concrete terms and prevents aimless experimentation.

- Workflow Embedding: The true test of alignment is when AI tools become a seamless part of daily workflows. This often requires process re-engineering. For instance, if R&D scientists have an AI system for drug discovery, the workflow might be redesigned so that AI-generated molecule suggestions feed directly into the lab testing pipeline. Or in manufacturing, predictive maintenance models should trigger automatic service tickets in the maintenance workflow. Embedding AI into the flow of work ensures adoption and value realization. McKinsey’s research suggests companies should focus on practical applications that empower employees in daily jobs to create competitive moats and ROI, rather than AI in a vacuum[20].

- Cultural Alignment: Lastly, an AI strategy must resonate with the company culture and change-management capacity. If a company prides itself on data-driven decision making, an AI strategy can lean into that by accelerating analytics and insights. If the culture is more risk-averse, leaders may need to emphasize pilot testing, transparency, and incremental wins to build trust in AI. One insightful finding is that employees are often more ready for AI than leaders realize[21], with many already using tools like ChatGPT in their work. Tapping into this grassroots readiness by gathering employee input and highlighting internal AI “champions” can further align the AI strategy with the people on the ground. Ultimately, cultural buy-in is achieved by communicating a clear vision of “AI augmenting our capabilities” and celebrating early wins where AI helped teams achieve something notable.
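To make the OKR link concrete, one option is to record each AI project against the key result it is supposed to move and report progress in those terms. The sketch below is illustrative only: the `KeyResult` class and the CSAT numbers are assumptions, not figures from any cited survey.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    objective: str
    metric: str
    baseline: float
    target: float

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed so far, clamped to [0, 1]."""
        span = self.target - self.baseline
        if span == 0:
            return 1.0
        return max(0.0, min(1.0, (current - self.baseline) / span))


# Illustrative numbers: a 20% lift on a CSAT baseline of 70 gives a target of 84.
chatbot_kr = KeyResult(
    objective="Increase customer satisfaction by 20%",
    metric="CSAT score",
    baseline=70.0,
    target=84.0,
)
print(f"{chatbot_kr.metric}: {chatbot_kr.progress(77.0):.0%} of the way to target")
```

Because progress is measured relative to the gap between baseline and target, the same calculation also works for metrics where lower is better, such as issue resolution time.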

A case in point is Finland’s OP Financial Group (a major bank), which began its AI journey in 2017. They introduced an AI chatbot for customers and an internal AI assistant for employees, not as tech gimmicks, but to directly streamline customer service and employee productivity.

By tying these tools to tangible workflow improvements (faster customer query resolution, easier internal knowledge access) and educating staff, OP embedded AI into its culture of innovation.

The result: higher adoption and measurable boosts to service quality and efficiency[23].

Governance, Risk and Compliance: Navigating the EU AI Act, GDPR, and Ethics

No AI strategy is complete without a robust approach to governance and risk management, especially given the rapidly evolving regulatory landscape.

In the EU and Nordic context, this is paramount – regulations like the EU’s AI Act and GDPR set clear expectations for responsible AI use. Rather than seeing compliance as a checkbox, savvy organizations treat it as a strategic pillar that protects the business and builds trust.

EU AI Act

Formally adopted in June 2024 and in force since August 2024, the EU AI Act is the world’s first comprehensive AI law. Most of its provisions become applicable in August 2026, with some (such as the bans on unacceptable-risk practices) applying earlier[24]. The Act mandates a risk-based framework: AI systems are classified by risk level (unacceptable, high, limited, minimal) with corresponding requirements.

For example, AI deemed “unacceptable risk” (like social scoring of citizens or real-time biometric ID for surveillance) will be banned outright in the EU[27]. “High-risk” AI (e.g. AI in healthcare, hiring, critical infrastructure) will require strict oversight: pre-market conformity assessments, documentation, transparency, and ongoing monitoring[29].

Even generative AI (like large language models) not in high-risk use must meet transparency requirements – e.g. disclosing AI-generated content and preventing unlawful outputs.

Strategic implication

Companies must inventory their AI use cases and map them to these risk categories. An AI strategy in 2025–2026 needs to include a compliance roadmap, so that by the time each obligation applies, every AI system in use (or procured from vendors) has the necessary documentation, human oversight, and safeguards.

Forward-looking organizations are already performing AI impact assessments and setting up AI oversight committees to be ahead of these rules.
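An inventory of this kind can start as a very small data structure. The sketch below assumes the risk tier of each system is assigned by a legal or compliance review, not by code. The system names, owners, and the `ACTIONS` mapping are hypothetical and only paraphrase the requirements summarized above, so treat it as a planning aid, not legal advice.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Follow-up actions paraphrased from the article's summary of the Act.
ACTIONS = {
    RiskTier.UNACCEPTABLE: ["discontinue (banned in the EU)"],
    RiskTier.HIGH: [
        "conformity assessment",
        "technical documentation",
        "human oversight",
        "ongoing monitoring",
    ],
    RiskTier.LIMITED: ["transparency disclosure to users"],
    RiskTier.MINIMAL: [],
}


@dataclass
class AISystem:
    name: str
    owner: str
    tier: RiskTier  # assigned by compliance review, not inferred here
    vendor_supplied: bool = False


def compliance_backlog(inventory: list) -> dict:
    """Map each system that needs follow-up to its list of open actions."""
    return {s.name: ACTIONS[s.tier] for s in inventory if ACTIONS[s.tier]}


inventory = [
    AISystem("CV screening model", "HR", RiskTier.HIGH, vendor_supplied=True),
    AISystem("Support chatbot", "Customer Service", RiskTier.LIMITED),
    AISystem("Email spam filter", "IT", RiskTier.MINIMAL),
]
for name, actions in compliance_backlog(inventory).items():
    print(f"{name}: {', '.join(actions)}")
```

Keeping the `vendor_supplied` flag in the inventory makes it easier to see which obligations depend on documentation the vendor must provide.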

GDPR and Data Privacy

The EU’s General Data Protection Regulation (GDPR) remains a crucial factor wherever AI touches personal data. GDPR requires that personal data be processed lawfully, for defined purposes, and on the basis of consent or another legal basis.

This means if you’re deploying an AI model on EU customer data, your strategy must enforce privacy-by-design: for instance, anonymizing or minimizing personal data fed into models and obtaining user consent for AI processing where required.

It also gives individuals rights like data deletion – which poses a challenge for AI, since deleting data that trained a model may require retraining the model[32]. Strategically, many companies are investing in privacy-preserving techniques as part of their AI approach: synthetic data generation, federated learning, and differential privacy are emerging tools to allow AI training without exposing real personal data.

By incorporating these techniques, organizations can both comply with privacy laws and gain a competitive edge in being trusted data stewards.

In the words of a Cisco AI strategy blog…

“Enterprises that innovate while staying ahead of regulations will have a competitive advantage, as early compliance builds consumer and partner trust[35].”
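Of the privacy-preserving techniques mentioned above, differential privacy is the easiest to show in a few lines. The sketch below applies the standard Laplace mechanism to a counting query using only the Python standard library. The function names, the example data, and the choice of epsilon are illustrative assumptions, and a production system would normally rely on a vetted privacy library instead.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags: list, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person's record changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sum(flags) + laplace_noise(1.0 / epsilon, rng)


# Illustrative data: 120 churned customers out of 1,000.
rng = random.Random(0)
churned = [True] * 120 + [False] * 880
print(round(private_count(churned, epsilon=0.5, rng=rng), 1))
```

A smaller epsilon adds more noise and gives stronger privacy, and choosing that trade-off is a governance decision as much as a technical one.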

Bias and Ethics

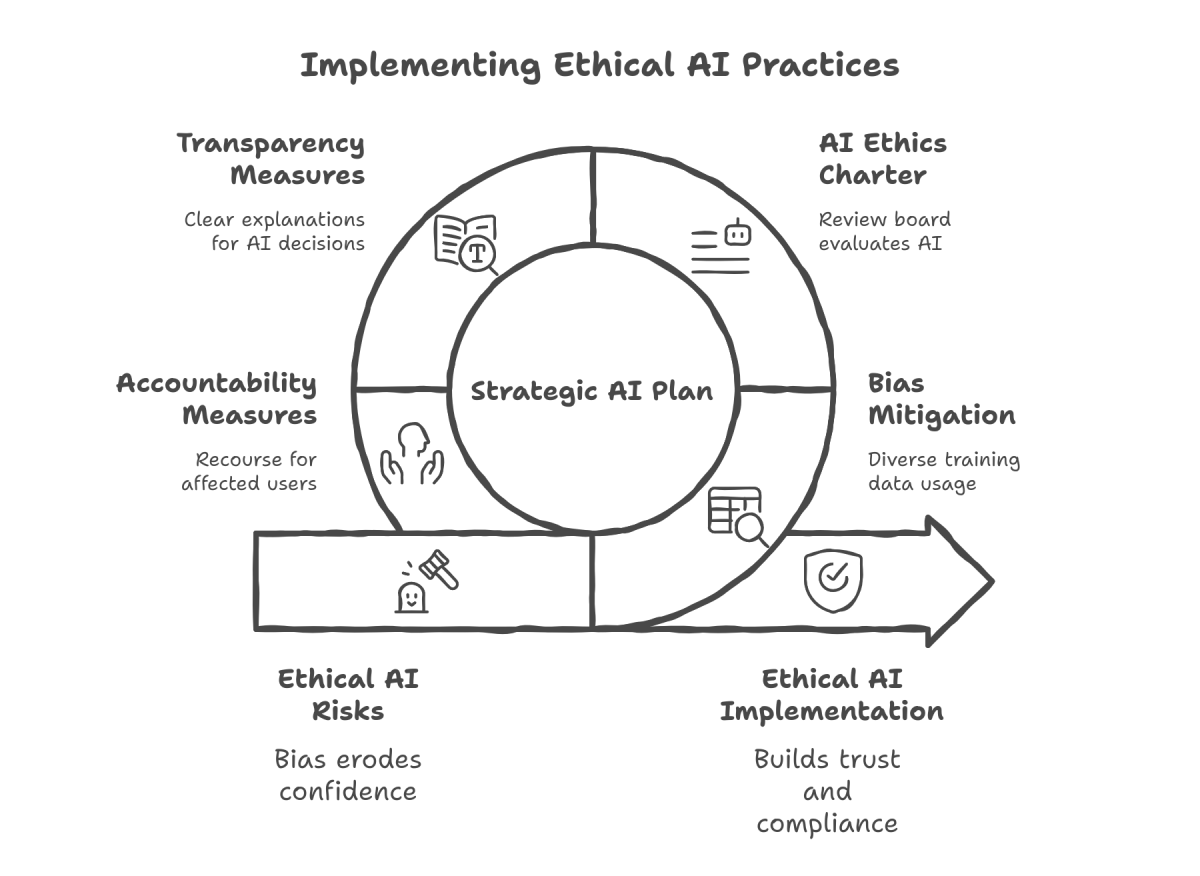

Ethical risks – such as bias in AI decisions or lack of transparency – can erode employee and customer confidence and invite regulatory scrutiny. A strategic AI plan should therefore include bias mitigation and auditability measures.

Encouragingly, surveys show over 90% of companies claim good progress on managing bias in models[36], often by using techniques like diverse training data, bias testing, and human review for sensitive use cases. Strategies might formalize this via an AI ethics charter and review board that evaluates high-impact AI systems for fairness and alignment with company values.

The Nordic perspective often emphasizes transparency and accountability – for example, Finland’s government has promoted AI ethics guidelines and open data initiatives to ensure AI benefits society.

Enterprises can mirror this by providing clear explanations for AI-driven decisions (especially in areas like finance or HR) and offering recourse when users are affected by an automated decision (a requirement under GDPR for significant decisions).

AI Governance Framework

Tying these threads together, leading organizations establish an AI governance framework as part of strategy. This might include:

- Policies and Standards: Documented policies on AI development and use (e.g. requiring human-in-the-loop for certain decisions, mandating model explainability for high-stakes AI).

- Roles and Responsibilities: Assigning an AI governance lead or committee, often drawing from compliance, legal, IT, and business units. Some appoint a Chief AI Ethics Officer or expand the remit of data privacy officers to cover AI.

- Risk Monitoring and Audits: Regular audits of AI systems for performance drift, bias, security vulnerabilities, and compliance. With the EU AI Act, expect external audits especially for high-risk AI. Internally, tools can log AI decisions and data usage to provide an audit trail.

- Incident Response: Protocols for handling AI “incidents”, e.g. if an AI system produces a harmful output or is found non-compliant, including how the company will address the problem and communicate with regulators or users.

Nordic enterprises are generally proactive in this arena. For instance, many Finnish companies have embraced “ethically aligned design” in AI solutions, reflecting the region’s emphasis on trust and responsibility. This approach isn’t just altruistic – it reduces risk of reputational damage and ensures sustainability of AI initiatives.
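The audit trail mentioned under risk monitoring can be as simple as one structured log line per AI decision. The sketch below is a minimal example under stated assumptions: the field names, the `audit_record` helper, and the credit-scoring example are hypothetical, and hashing the inputs only avoids copying raw values into the log (it does not by itself make the data anonymous).

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(
    model_id: str,
    model_version: str,
    inputs: dict,
    output: str,
    reviewer: Optional[str] = None,
) -> str:
    """Build one JSON line describing a single AI decision."""
    # Sort keys so the same inputs always produce the same hash.
    canonical = json.dumps(inputs, sort_keys=True)
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "output": output,
        "human_reviewer": reviewer,  # None means no human was in the loop
    }
    return json.dumps(record)


line = audit_record(
    "credit-scoring",
    "1.4.2",
    {"income": 52000, "term_months": 36},
    "refer_to_human",
)
print(line)
```

Appending such lines to a write-once store gives auditors a record of which model version produced which decision and whether a human reviewed it.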

Building AI Centers of Excellence and Cross-Functional Teams

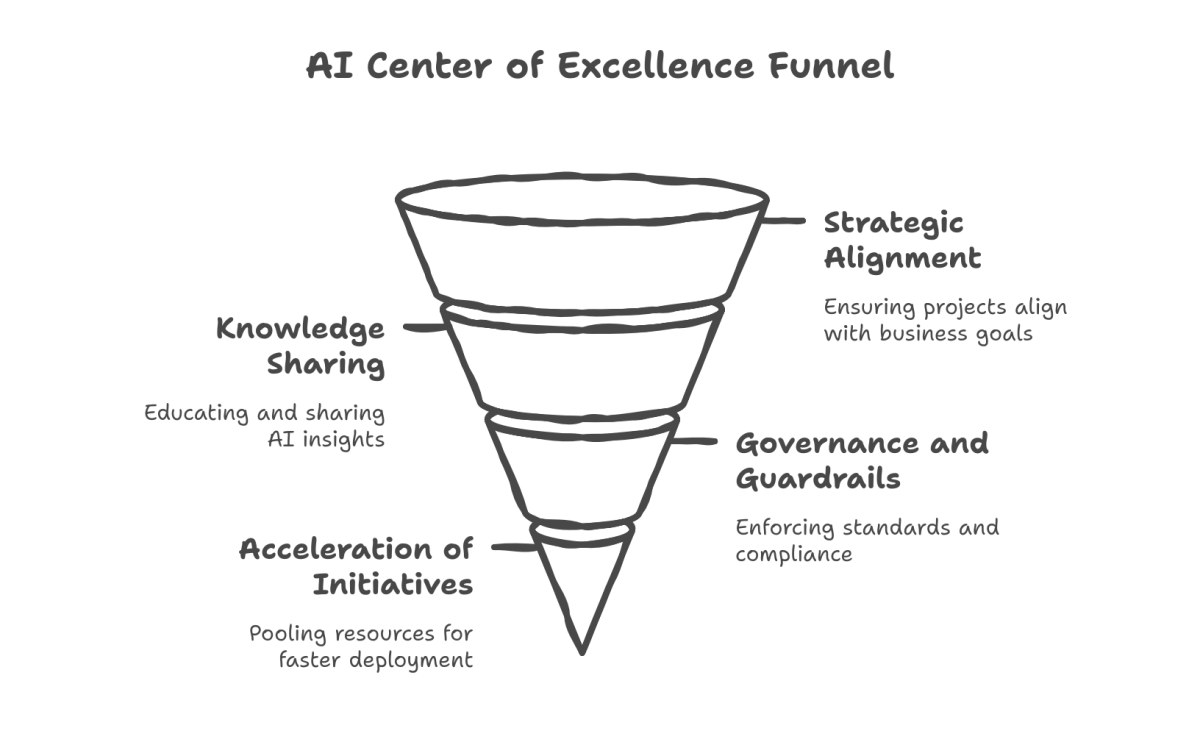

To execute an AI strategy at scale, organizations often need to build internal capacity and governance structures. One proven model is the AI Center of Excellence (CoE) – a dedicated team that centralizes AI expertise, resources, and best practices. An AI CoE acts as a hub to coordinate the company’s AI efforts, much like a nerve center ensuring all parts of the organization can leverage AI effectively.

According to IDC, the impetus for creating AI CoEs is growing as companies realize AI has become “an all-encompassing strategic initiative” and they need a way to “cut through the noise” and ground AI projects in reality[37][38].

Key benefits of an AI CoE include:

- Strategic Alignment: The CoE can evaluate and prioritize AI project proposals to ensure they align with the broader business strategy and ethical guidelines. This prevents random projects from siphoning resources. As one AI architecture expert notes, the CoE’s goals are “to better understand AI capabilities, align AI initiatives with broader organizational strategy and ethics, build internal trust, and put governance in place early.”

- Knowledge Sharing and Education: “An AI center of excellence helps cut through the hype. Its role is to educate, dispel myths, and ground AI initiatives in reality,” explains Richard Buractaon of Andesite AI[40]. The CoE brings together top talent – data scientists, engineers, but also business analysts and domain experts – who develop standards and share lessons learned across departments. They might run an internal AI academy, publish playbooks, or provide consulting to business teams on how to implement AI. This cross-pollination is vital given the AI talent shortage; a CoE amplifies scarce expertise by spreading it organizationally.

- Governance and Guardrails: A centralized team is well-positioned to enforce governance (security, compliance, quality) across AI projects. The CoE can establish model review processes, data standards, and validation protocols that every project must follow. For instance, before a new AI tool is deployed, the CoE might require a bias audit or security penetration test. This ensures consistency and prevents rogue deployments that could cause harm.

- Acceleration of AI Initiatives: By pooling resources, a CoE can build shared infrastructure (like an enterprise ML platform or data lake) and avoid redundant effort. It can also tackle foundational projects like enterprise NLP capabilities or computer vision platforms that individual units can then leverage. Companies with AI CoEs often find they can go from pilot to production faster, because the CoE provides battle-tested pipelines and expert guidance. IDC found that many organizations with successful GenAI deployments had CoEs that addressed challenges like securing data, preventing hallucinations, and controlling costs in a centralized way[41].

Setting up an AI CoE requires executive support and the “right mix” of people. Experts advise staffing the CoE with a blend of technical and business roles[42].

For example:

- Data scientists and ML engineers to build models and manage infrastructure.

- Software engineers to integrate AI solutions into existing systems.

- Business analysts and domain experts (from finance, marketing, etc.) to ensure use cases and models are relevant and impactful.

- Project managers to coordinate efforts and ensure agile delivery.

- IT architects and security specialists to handle integration and compliance.

- Champions from business units to evangelize AI and relay feedback from end-users.

Crucially, the CoE must remain connected to the business. It’s not an ivory tower. Many companies use a “hub-and-spoke” model: the central AI CoE (hub) works closely with satellite teams or representatives in each business unit (spokes). Those reps might be upskilled by rotating through the CoE, then returning to their departments to drive AI adoption locally.

As one expert described, the CoE should “gather top employees from throughout the organization to work together, share expertise, and then bring newly gained knowledge and culture back to their original units.”[43] This ensures the AI culture permeates and that solutions fit each unit’s needs.

Finally, some organizations opt for cross-functional AI task forces or squads as an alternative or complement to a CoE. These are project-based teams that include IT, data, and business stakeholders working on a specific AI initiative (say, an AI-powered supply chain optimizer).

The advantage is tight alignment with a particular goal, though without a CoE there’s risk of siloed learnings. Many large enterprises thus use both: a central CoE for governance and shared services, plus agile squads for each major AI project.

Vendor Strategy: Buy vs. Build in the Era of AI Platforms and LLMs

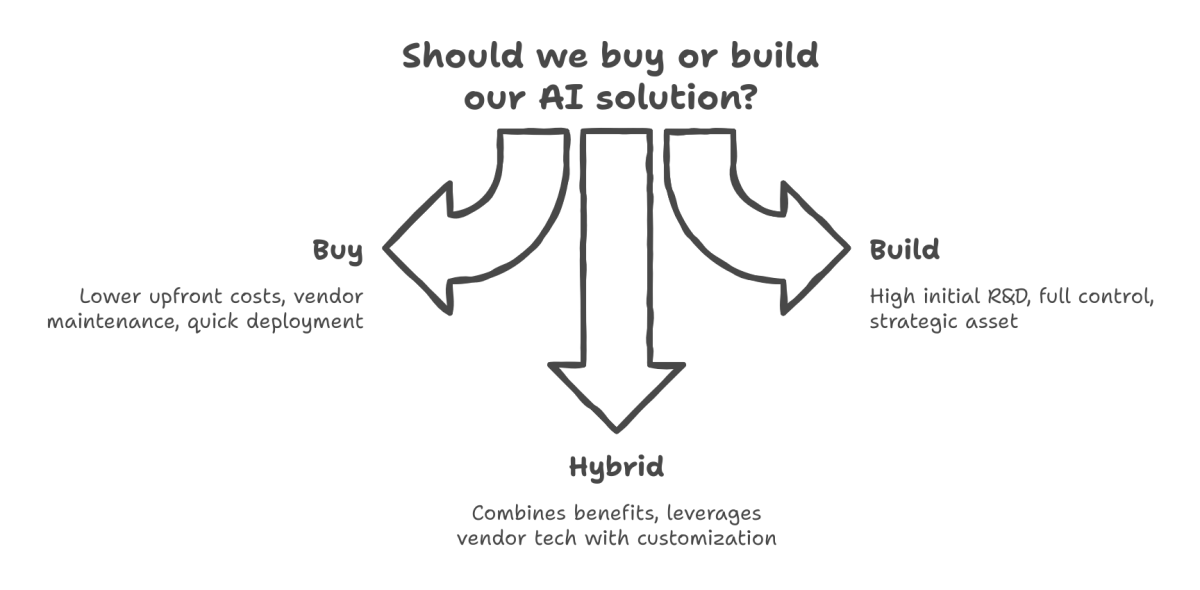

The explosion of AI capabilities (especially from big vendors and open-source communities) means organizations face an ongoing strategic choice: do we build our own AI solutions or buy existing ones (or use third-party platforms)? A sound AI strategy includes a clear vendor strategy that balances these options.

On one hand, the market is flooded with AI-as-a-service offerings, from big cloud providers (Azure, AWS, Google) to niche startups offering pre-built solutions for everything from computer vision to document processing.

Buying or licensing such solutions can dramatically speed up implementation. For example, a company could license an LLM (Large Language Model) service from a provider to power its chatbot, rather than training its own model from scratch. This might provide instant capability at the cost of ongoing fees and less control.

On the other hand, building in-house (or fine-tuning open-source models) can offer customization and potential IP advantage, but requires significant expertise and investment in data and infrastructure.

The decision is not one-size-fits-all. It depends on factors like cost, time-to-market, available talent, and strategic differentiation.

As consulting firm RSM notes:

“with the number of AI solutions growing by the day, companies can buy existing AI tools embedded in their tech stack or build custom solutions for specific objectives. Both are proven avenues for success – the right choice depends on cost, time-to-market, use case, in-house talent, and data foundations.” [44]

In other words, assess each use case through multiple lenses:

1. Speed and Complexity

If a solution is needed fast or the problem is fairly common (like invoice processing or basic image recognition), a third-party product might make sense. For highly unique problems core to your business (say, a proprietary trading algorithm or a novel product feature), building in-house could yield a competitive edge.

2. Cost and TCO

Buying often has lower upfront cost but ongoing licensing fees; building may require high initial R&D spend but lower marginal costs later. Also consider maintenance: vendor tools shift the maintenance burden outward, whereas building means you maintain it. A total cost of ownership analysis over 3-5 years can clarify the economics.
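The total cost of ownership comparison is straightforward arithmetic. The sketch below uses made-up figures (in thousands of euros) and ignores discounting, so it illustrates the shape of the analysis without offering a benchmark. The function names are hypothetical.

```python
def cumulative_costs(upfront: float, annual: float, years: int) -> list:
    """Cumulative spend at the end of each year (undiscounted)."""
    return [upfront + annual * year for year in range(1, years + 1)]


def breakeven_year(buy: list, build: list):
    """First year in which building is no more expensive than buying, or None."""
    for year, (buy_cost, build_cost) in enumerate(zip(buy, build), start=1):
        if build_cost <= buy_cost:
            return year
    return None


# Illustrative figures only, in thousands of euros.
buy = cumulative_costs(upfront=50, annual=200, years=5)    # low entry cost, licence fees
build = cumulative_costs(upfront=500, annual=80, years=5)  # high R&D cost, lower run cost

print("Buy:  ", buy)
print("Build:", build)
print("Build breaks even in year:", breakeven_year(buy, build))
```

With these assumed numbers, building only becomes cheaper in year 4, which is why the choice of a 3-year or 5-year horizon can flip the decision.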

3. Talent and IP

Do you have (or can you hire) the talent to build and sustain the AI solution? If not, buying avoids a long talent ramp-up. Conversely, if AI expertise is a strategic asset you want to cultivate internally, building projects can accelerate learning.

Additionally, consider IP and data – building in-house keeps full control of your models and potentially valuable data/insights, while using a vendor may entail sharing data (which raises compliance questions) or accepting limited model transparency.

4. Flexibility and Integration

Pre-built platforms might limit customization or integration with legacy systems. Building allows tailoring the solution exactly to your workflows and perhaps integrating with proprietary data sources more deeply. However, many modern AI platforms (including open-source LLMs) are becoming more adaptable through fine-tuning and APIs, so the gap is narrowing.

A middle path is emerging: “buy and then build upon”. For example, an enterprise might buy access to a powerful foundation model (like GPT-4 via Azure OpenAI or open-source Llama models) and then build a fine-tuned version or custom layer on top for its specific needs.

This approach leverages the best of both worlds – using vendor tech for the heavy lifting of language understanding, while customizing prompts, training on internal data, and integrating the model into internal apps. Many organizations are doing exactly this with LLM providers and vector database vendors to create bespoke generative AI applications without starting from zero.

Vendor evaluation is also a key part of the strategy. When choosing AI product vendors or cloud platforms, companies should assess:

1. Capabilities and Roadmap

Does the vendor’s AI capability meet your requirements (accuracy, scalability)? Is the vendor innovating fast? This matters in AI, since new features (like better multilingual support or transparency tools) are emerging quickly.

2. Vendor Lock-in vs. Portability

Strategies increasingly favor an open architecture where possible. Using services that support open standards or allow exporting your models/data can prevent being trapped if the vendor’s costs rise or strategy diverges.

Some enterprises negotiate rights to their fine-tuned models even when using vendor services.

3. Compliance and Trust

Especially for EU/Nordic firms, ensure the vendor solution complies with GDPR and upcoming AI Act provisions – e.g. does the provider offer necessary documentation, allow audits, store data in approved regions (for data sovereignty)?

Choosing reputable vendors who prioritize AI ethics and security can de-risk your adoption.

4. Ecosystem and Support

Consider the ecosystem around the vendor – availability of skilled partners, third-party integrations, and community support. A robust ecosystem can accelerate your deployment and troubleshooting.

In short, a pragmatic mix of buy/build is often ideal. Commodity AI services can be bought to save time, while strategic AI capabilities that differentiate your business can be developed in-house or with close partner collaboration.

The strategy should also remain fluid: reassess decisions as tech evolves. For instance, what you custom-built two years ago might now be available as an affordable service – and maybe it’s time to switch, freeing your team to focus on the next innovation.

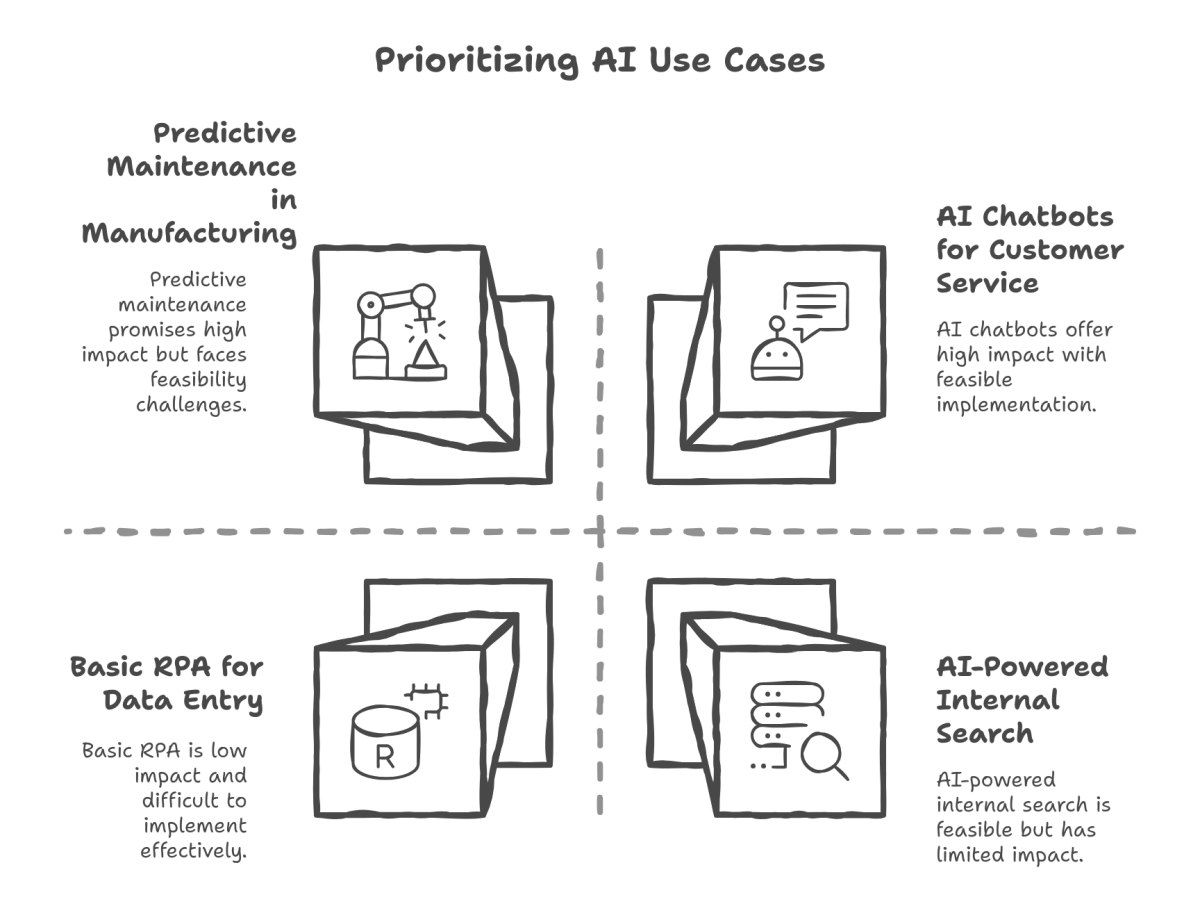

Strategic Use Cases and Quick Wins for Enterprise AI

As part of defining an AI strategy, it’s helpful to identify some high-impact use cases that can serve as pilots or quick wins – showing value early and building momentum. Common strategic use cases in enterprises include:

Customer Service & Support

This remains one of the most popular areas for AI deployment. AI chatbots and virtual agents can handle routine inquiries 24/7, improving response times and freeing human agents for complex issues. Notably:

“Conversational AI has delivered the highest ROI among AI applications for many companies.”

Survey data[45][46]

For example, Finland’s national airline or a telecom might use a multilingual AI chatbot to instantly answer customer questions, providing consistent service in Finnish, Swedish, English, etc. Additionally, AI can assist human support reps – e.g. suggesting answers or summarizing customer sentiment – to speed up resolution.

Given the high volume nature of support, even small efficiency gains translate to big savings and better customer satisfaction.

Knowledge Management and Internal Search

Every large organization struggles with siloed information. AI offers a solution through semantic search and Q&A systems that let employees query internal knowledge bases in natural language.

A great example is Cintas (a global services company) using Vertex AI Search to power an internal knowledge center, so their sales and customer service teams can easily find key information across manuals and documents[47].

By asking a question in plain language, an employee gets a concise, AI-generated answer with references – instead of hunting through intranets or PDFs. Such internal AI assistants can dramatically improve productivity and are relatively low-risk as they deal with company-specific data (where accuracy can be verified). As a quick win, companies often pilot an AI knowledge bot for IT helpdesk or HR policy questions.

R&D and Innovation

AI can augment research and development, whether through predictive models in product design, simulations, or generative AI for brainstorming solutions. By some estimates, AI could double the pace of R&D in certain industries by accelerating hypothesis generation and analysis[48].

In pharmaceuticals, AI might suggest drug molecule candidates; in engineering, generative design algorithms propose optimal product designs under given constraints. These use cases directly tie to competitive advantage by reducing time-to-market for innovations.

Organizations with heavy R&D should include an AI strategy for that function (many Nordic manufacturing firms, for example, use AI for predictive maintenance of equipment or to optimize supply chain logistics via AI agents that adjust plans in real-time).

Data Analytics and Decision Support

Traditional business intelligence is being supercharged by AI. An AI strategy often involves upgrading analytics from static reports to augmented analytics, where AI not only finds patterns but also provides narrative insights (“sales dipped due to X in region Y”) and even forecasts.

Moreover, “AI copilots” can assist executives and analysts by taking natural language queries and generating insights on the fly. Imagine a finance director asking an AI assistant, “Explain the key drivers of expense increases this quarter,” and getting an immediate analysis across ERP data. This moves decision-making from reactive to proactive.

Companies can start with a focused scenario – e.g. an AI that analyzes quarterly financials or one that scans market news to brief strategy teams – demonstrating how AI provides actionable intelligence beyond what manual analysis would.

Process Automation (Intelligent Automation)

Many back-office processes (in finance, HR, procurement) involve repetitive, rules-based work that AI can streamline. AI techniques like document understanding (OCR + NLP) can extract data from invoices, contracts, or emails and trigger workflows automatically.

For example, an insurance firm might use AI to scan claims and flag high-priority ones or even draft initial claim assessments. While basic RPA (robotic process automation) handles structured tasks, AI brings this to the next level by handling unstructured inputs and making context-based decisions.

Strategic use cases include automating employee onboarding paperwork, monitoring compliance (AI flagging anomalous transactions), or optimizing routing in logistics. The impact is twofold: efficiency gains and reduction of human error.

Industry-Specific Applications

Depending on the sector, certain AI use cases will align strongly with strategic goals. In banking, for instance, fraud detection and personalized customer marketing via AI are key. In retail, demand forecasting and dynamic pricing AI can increase margins. In healthcare, AI assisting in diagnostics or patient triage can improve outcomes.

A Nordic-specific example: energy companies in Finland and Sweden are using AI for predictive maintenance of the power grid and to balance supply-demand with renewable inputs. When crafting an AI strategy, list the top 3–5 use cases per business unit that could significantly boost revenue, reduce cost, or improve experience, and then prioritize by feasibility and value.

Selecting initial projects that are realistic in scope but meaningful in impact is crucial. Early success builds credibility for the AI strategy. For instance, telecom CEOs often cite network operations centers where AI predicts outages before they occur – a very tangible benefit in uptime. Such wins create internal champions and justify further investment.

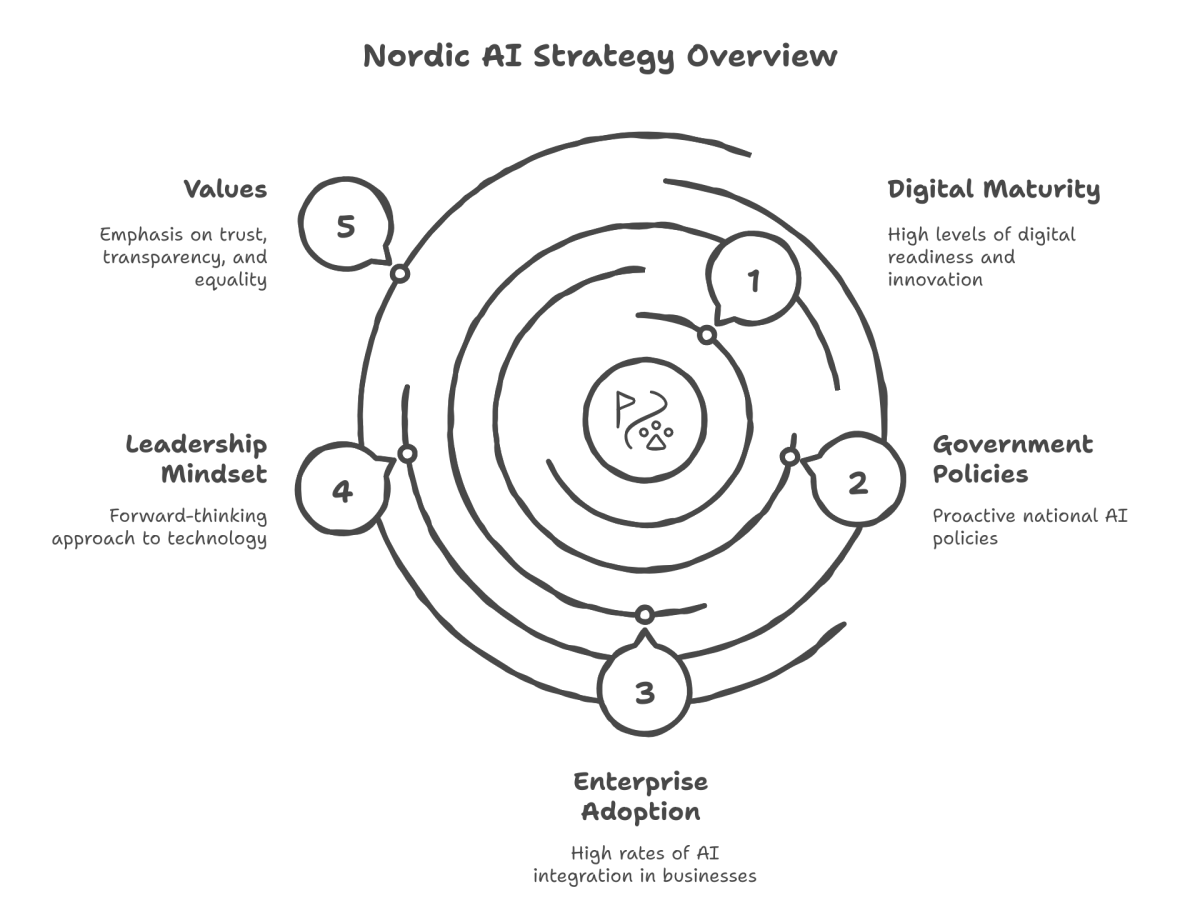

Nordic Context: Leading by Example in AI Adoption

The Nordic region, including Finland, Sweden, Norway, and Denmark, offers a unique context for enterprise AI strategy. These countries consistently rank high in digital maturity and innovation indices, and their governments have been proactive in national AI policies.

For example, Finland ranks among the top European countries for enterprise AI adoption: by 2024, around 24% of Finnish enterprises had incorporated some AI, a figure that jumps to 57% among large companies[49]. Separate studies (e.g. Microsoft’s) even suggest that over 60% of Finnish firms use AI in some form[6], which would put Finland ahead of its Nordic neighbors.

This environment creates both opportunities and expectations. Nordic enterprise leaders tend to have a forward-looking mindset about tech, but also a strong emphasis on trust, transparency, and equality (values reflected in regulations and public sentiment).

Four considerations follow for AI strategy in the region:

Regulation and Ethics

Nordic companies are preparing early for EU regulations and often go beyond minimal compliance. There is an emphasis on “AI that reflects our societal values.”

For instance, companies ensure that AI interfaces are available in local languages (Finnish, Swedish, etc.) to be inclusive, and that AI decision-making can be explained to users in human terms.

A bank in Finland implementing an AI credit scoring model might proactively engage with regulators or consumer groups to ensure fairness and avoid bias against any demographic – turning compliance into a collaborative effort that could even enhance their brand reputation.

Public-Private Collaboration

The Finnish government’s open AI challenges and investment in AI education (like the famous “Elements of AI” online course) mean that the talent pipeline and public awareness are relatively strong. Enterprises can tap into local AI startups and university research, which are often supported by government innovation funds.

An AI strategy in the Nordics could explicitly include partnering with local AI hubs or consortiums (for example, Finland’s AI Finland initiative or Norway’s AI Sandbox) to stay at the cutting edge.

Use Case Focus

Nordic enterprises often champion use cases with societal impact. Energy companies use AI for sustainability (smart grids, reducing waste), healthcare providers use AI to improve patient care in remote areas, and public sector agencies explore AI assistants for citizen services in Finnish or Norwegian.

Highlighting such use cases can rally internal support – employees and stakeholders feel the AI strategy isn’t just about profit, but also doing good, which resonates strongly in Nordic business culture.

Workforce and Unions

The Nordic model also means workers and unions have a voice. When rolling out AI that may affect jobs or work practices, a strategic approach involves early dialogue with employee representatives, clear communication about how AI will augment rather than replace roles, and providing reskilling opportunities. This inclusive approach avoids backlash and taps into frontline insights for better implementation.

By integrating these Nordic considerations, companies ensure their AI strategy is not a siloed tech plan but a harmonious part of their overall corporate strategy and social context.

Strategic Takeaways

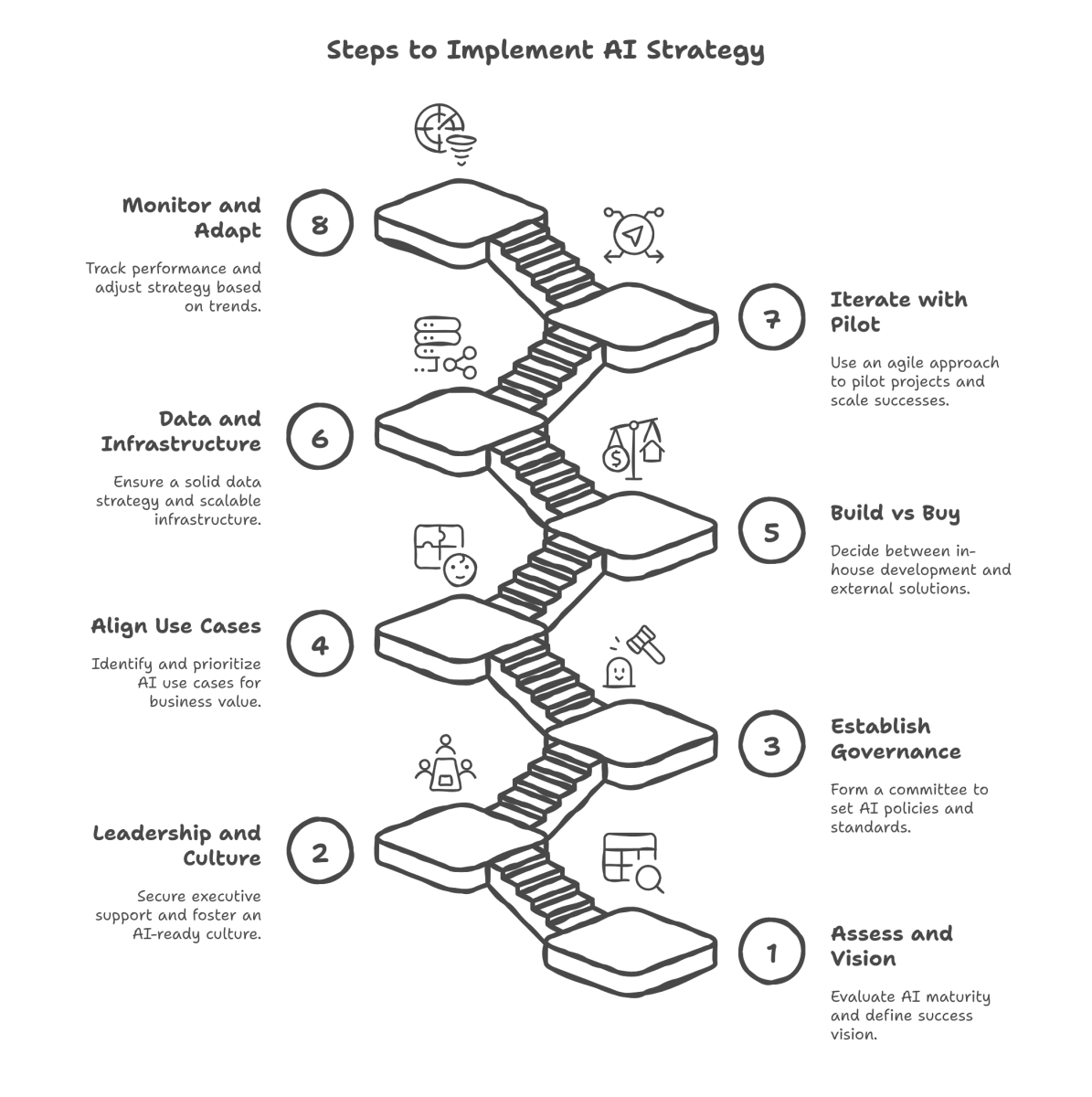

Developing and executing a modern AI strategy is a multidisciplinary endeavor. Here are the practical next steps and takeaways for leaders looking to get it right:

1. Assess and Envision

Benchmark your AI maturity candidly (use models like Gartner’s) and identify gaps. At the same time, craft a clear vision of what AI success looks like for your organization in 2–5 years (e.g. “AI drives 25% of our innovation pipeline” or “Automated customer agents handle 50% of queries with CSAT ≥90%”). This vision, backed by data and examples, will align stakeholders.

2. Leadership and Culture First

Recognize that AI strategy is 80% about people and processes. Secure executive sponsorship (CEO, business unit heads) and communicate that AI is a priority to drive growth and efficiency. Invest in building an AI-ready culture: launch internal AI education programs, highlight quick wins, and address fears through transparency. Empower cross-functional AI champions in each department.

3. Establish Governance Early

Form an AI governance committee or Center of Excellence to start putting guardrails and best practices in place. Develop initial AI policies (responsible AI principles, data usage guidelines, compliance checklists). This body will ensure each new AI project meets quality and ethical standards and help navigate regulations like the EU AI Act proactively.

4. Align Use Cases with Business Value

For each business unit, identify the top AI use cases that align with its objectives, and rank them by impact and feasibility. Prioritize a few use cases that can be piloted within 6–12 months for tangible results (e.g. a sales lead scoring model for marketing, a chatbot for IT support). Define success metrics (ROI, time saved, revenue uplift, etc.) upfront for each.

5. Build vs Buy Decisions

Create a framework (as part of your strategy) to continually evaluate when to build in-house vs. leverage external AI solutions. Start with a survey of existing vendor tools your company is paying for – are you fully utilizing them?

Could extending a current platform achieve a goal faster than building anew? Conversely, identify any core competencies where developing proprietary AI (or fine-tuning an open model on your data) could differentiate you. Plan for flexibility – you might build something now, but remain open to switching to a SaaS product if it matures (or vice versa).

6. Data and Infrastructure Foundations

Ensure a solid data strategy underpins your AI plans. This includes investing in data integration (breaking silos so AI models have rich data), data quality initiatives, and scalable infrastructure (cloud or on-prem GPU clusters) to train and deploy models.

According to research, 80% of companies have developed a data strategy to support AI – this is a prerequisite to get beyond pilot purgatory[50][51]. Identify any gaps like lack of real-time data access or insufficient data engineering talent and address those early.

7. Iterate with a Pilot-to-Scale Approach

Use an agile approach – start with pilot projects, learn from them, and iterate. When a pilot succeeds, have a plan ready to scale it (more users, more use cases, integration into core systems). When something fails, document the lessons and pivot. Many AI initiatives won’t get it perfect the first time; a resilient strategy treats each pilot as a step in a learning journey, not a pass/fail exam.

8. Monitor, Measure, Adapt

Define a set of AI performance and value metrics at the program level – e.g. percent of processes enhanced by AI, AI-driven revenue, model deployment frequency, etc. Track these quarterly as you would sales or operations metrics.

Also keep an eye on external trends (new algorithms, competitor moves, regulatory changes) and be ready to adjust your strategy. Think of the AI strategy as a living document.
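The program-level metrics mentioned above can be tracked with very little tooling. The following sketch assumes a quarterly snapshot with a handful of counts; the field names and all numbers are hypothetical, chosen only to show how the percentages and quarter-over-quarter changes might be computed.

```python
from dataclasses import dataclass


@dataclass
class QuarterSnapshot:
    quarter: str
    total_processes: int        # processes in scope for the AI program
    ai_enhanced_processes: int  # of those, how many now use AI
    total_revenue: float
    ai_driven_revenue: float    # revenue attributed to AI-enabled offerings
    model_deployments: int      # production deployments during the quarter


def program_metrics(s: QuarterSnapshot) -> dict[str, float]:
    """Compute the three example metrics for one quarter."""
    return {
        "pct_processes_enhanced": 100 * s.ai_enhanced_processes / s.total_processes,
        "pct_ai_driven_revenue": 100 * s.ai_driven_revenue / s.total_revenue,
        "deployments_per_month": s.model_deployments / 3,
    }


def quarter_over_quarter(prev: QuarterSnapshot, curr: QuarterSnapshot) -> dict[str, float]:
    """Absolute change in each metric between two quarters."""
    before, after = program_metrics(prev), program_metrics(curr)
    return {name: after[name] - before[name] for name in after}


# Made-up figures for illustration.
q1 = QuarterSnapshot("Q1", 200, 20, 50_000_000, 2_500_000, 6)
q2 = QuarterSnapshot("Q2", 200, 30, 52_000_000, 3_900_000, 9)

for name, change in quarter_over_quarter(q1, q2).items():
    print(f"{name}: {change:+.1f}")
```

Reviewing these deltas alongside sales and operations figures each quarter is one way to keep the AI strategy a living document instead of a one-off plan.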

Summary

By following these steps, organizations can move beyond sporadic AI experiments to a sustainable, enterprise-wide AI capability. It’s about integrating AI into the fabric of the business – aligning with goals, empowering people, managing risks, and constantly delivering value.

Companies that do this will not only avoid falling behind; they stand to redefine industry frontiers and capture the transformative potential AI holds for the years ahead.

References

- McKinsey – State of AI in 2024 (workplace survey)

- Gartner (via LXT) – AI Maturity Model levels and enterprise adoption

- BCG – AI adoption survey 2024 (global maturity and value)

- Cisco – Balancing AI deployment with privacy and compliance (GDPR, AI Act)

- European Parliament – EU AI Act summary and timeline

- IDC – Building an AI Center of Excellence (benefits & roles)

- RSM – Buy vs. Build AI Tools (criteria)

- LXT Research – AI trends: data strategy, bias progress, ROI of Conversational AI

- Google Cloud – GenAI use case (Cintas internal knowledge search)

- Statistics Finland – AI adoption rates in Finnish enterprises 2024

- Microsoft (Source) – Nordic AI adoption study (Finland vs. Nordics)

- agility@scale blog – Culture and leadership in AI transformation